The Ghost of a Decision Not Made

In 2005, shortly after taking the helm as Intel's chief executive, Paul Otellini walked into a board meeting with an audacious proposal. According to a New York Times report based on sources familiar with the boardroom discussion, Otellini suggested that Intel acquire a relatively small graphics chip company, Nvidia, for as much as $20 billion. Some Intel executives in that room recognized what others could not yet see: that Nvidia's parallel processing architecture might one day prove essential to data center computing. The board, however, balked. At $20 billion, the deal would have been Intel's most expensive acquisition ever, and directors worried about integrating the company, given Intel's poor track record with acquisitions. Otellini backed off. One attendee at that meeting would later describe it as a fateful moment.

The magnitude of that fateful moment can now be measured with precision. As of early 2026, Nvidia's market capitalization exceeds $4.6 trillion, making it roughly twenty-five times more valuable than Intel, whose market cap collapsed to under $90 billion during 2024 before a partial recovery. Intel was removed from the Dow Jones Industrial Average in November 2024, replaced by Nvidia itself. The board that rejected Otellini's proposal instead backed an internal graphics project called Larrabee, led by Pat Gelsinger, then a senior vice president. That project was eventually cancelled. Gelsinger returned to Intel as CEO in 2021, tasked with engineering a turnaround, but was forced out by the board in December 2024 after directors concluded his ambitious plan was not working fast enough. The company he inherited was already reeling; the company he left behind was posting the largest quarterly loss in its fifty-six-year history. And in a twist that borders on the absurd, Nvidia announced in late 2025 that it would invest $5 billion in Intel: the acquirer that never was now receives a capital lifeline from the company it could once have owned for a tiny fraction of its current value.

This history matters not as a tale of corporate regret but as a framework for understanding the strategic choices Nvidia faces today. Jensen Huang's company now occupies the position Intel once held: dominant, seemingly unassailable, the essential infrastructure layer for a technological era. The question that haunted Intel's board in 2005 (how do you deploy capital to ensure dominance persists?) is now the question Nvidia must answer, with far higher stakes.

The Architecture of Permanence in a World of Exponential Change

As 2026 begins, Nvidia is the world's most valuable company and faces critical decisions about how to deploy its growing wealth. With $60.6 billion in cash and short-term investments, and nearly $576 billion in projected free cash flow over the next three years, Nvidia has moved beyond traditional semiconductor manufacturing to become a central force in AI infrastructure. The challenge is no longer sustaining dominance, but making it permanent.

Intel's cautionary tale looms over every strategic decision. The Communications of the ACM, in a December 2024 analysis titled "Intel's Fall from Grace," observed that Nvidia now possesses something Intel never had, even at its peak: the combination of hardware lock-in and software ecosystem dominance that Intel and Microsoft together achieved with the "Wintel" partnership, but unified within a single company. The CUDA platform, developed since 2006, has over four million developers and deep integration with every major AI framework, creating switching costs that no competitor has overcome. For Nvidia, the imperative is clear: never become the company that rejects the $20 billion acquisition, never become the board that cannot see what the future requires.

[Figure: NVIDIA Strategic Ecosystem. Panels cover the Groq deal (non-exclusive licensing agreement for LPU technology; Jonathan Ross and Sunny Madra lead operations; Groq maintains independent licensing rights), the antitrust subpoenas issued September 2024 (concerns: tying agreements, market power), a statement attributed to CFO Colette Kress, physical AI (humanoid robotics integration, autonomous vehicle systems), and CoWoS advanced packaging as an essential supply chain dependency.]

The Cash Deployment Paradox: When Scale Becomes Its Own Constraint

Nvidia's financial position presents a paradox: one that any other company would envy, but a genuine strategic challenge for a firm of its magnitude. Traditional avenues for deploying capital have narrowed considerably. Large-scale acquisitions, the conventional playbook for technology giants seeking to consolidate market position, have become essentially impossible. The $40 billion bid for Arm Holdings, announced in 2020 and abandoned in 2022, demonstrated that regulators will intervene when Nvidia attempts to expand its footprint through conventional mergers, and the ongoing Department of Justice antitrust investigation has only heightened scrutiny of the company's market practices. The DOJ's subpoenas, issued as part of probes into both Nvidia's acquisition of Israeli AI management startup Run:AI and broader concerns about alleged tying agreements and preferential pricing for exclusive customers, have created an environment where every strategic move is examined through a regulatory lens.

Because of these limits, Nvidia has turned to creative deals that let it grow without drawing antitrust action. The $20 billion deal with Groq is a good example. Instead of buying Groq outright, Nvidia made a non-exclusive licensing agreement. This way, Nvidia gets Groq’s technology and most of its top talent—including founder Jonathan Ross, president Sunny Madra, and about 90% of Groq’s engineers—while leaving the company itself in place. Analyst Stacy Rasgon called this “the fiction of competition.” The deal helps Nvidia in two ways: it removes a rising competitor in inference chips and gives Nvidia access to Groq’s specialized Language Processing Unit technology, which is key for the next wave of AI computing.

The contrast with Intel's 2005 decision is instructive. Where Intel's board saw a $20 billion acquisition as prohibitively expensive and risky, Nvidia's leadership views a $20 billion licensing-and-talent deal as routine, announcing it on Christmas Eve without a press release. "They're so big now that they can do a $20 billion deal on Christmas Eve with no press release and nobody bats an eye," Rasgon observed. This is what it looks like when a company has learned the lesson of the fateful moment.

The Inference Imperative: Why Groq Changes Everything

The Groq deal must be understood in the context of a fundamental shift occurring in AI workloads. For the past several years, the industry's overwhelming focus has been on training, the computationally intensive process of teaching AI models to recognize patterns across massive datasets. This is the domain where Nvidia's general-purpose GPUs have been essentially unchallenged. But the economics of AI are changing rapidly. Late 2025 marked a tipping point: for the first time, inference, the process of running trained models to generate outputs in real time, began to exceed training as the dominant compute workload. This shift has profound implications for the competitive landscape because inference workloads differ fundamentally from training workloads.

Running inference at scale needs low latency, high memory bandwidth, and energy efficiency—areas where Nvidia’s usual GPUs have limits. The key issue is how fast data moves. Inference has two parts: the prefill phase, which is compute-heavy, and the generation phase, which depends on memory bandwidth. If data can’t move quickly from memory to the processor, performance drops, no matter how powerful the chip is. Groq’s Language Processing Units use SRAM instead of the High Bandwidth Memory Nvidia uses, and they perform better in the generation phase, where GPUs often struggle. By adding Groq’s technology, Nvidia is showing that the era of one-size-fits-all GPUs is over and is preparing to lead in the new, more specialized inference market.
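The split between a compute-heavy prefill phase and a bandwidth-bound generation phase can be illustrated with a back-of-the-envelope roofline estimate. The sketch below is a simplification under stated assumptions, not a model of any real chip: the `Accelerator` figures, the 70-billion-parameter model, and the rule of thumb of roughly 2 FLOPs per parameter per token are illustrative placeholders, and it ignores batching, the KV cache, and interconnect effects.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Accelerator:
    """Hypothetical accelerator; values are placeholders, not vendor specs."""
    peak_flops: float      # floating-point operations per second
    mem_bandwidth: float   # bytes per second from memory to the compute units


def step_time(acc, flops, bytes_moved):
    """Roofline estimate: a step takes as long as its slower resource."""
    compute_s = flops / acc.peak_flops
    memory_s = bytes_moved / acc.mem_bandwidth
    bound = "compute" if compute_s >= memory_s else "memory"
    return max(compute_s, memory_s), bound


def prefill(acc, n_params, bytes_per_param, prompt_tokens):
    # The whole prompt is processed in one pass: weights are read once,
    # while arithmetic grows with the number of prompt tokens.
    flops = 2 * n_params * prompt_tokens
    return step_time(acc, flops, n_params * bytes_per_param)


def decode_one_token(acc, n_params, bytes_per_param):
    # Each generated token re-reads every weight but performs only
    # about 2 FLOPs per parameter, so memory traffic dominates.
    return step_time(acc, 2 * n_params, n_params * bytes_per_param)


# Illustrative inputs (assumptions): 1 PFLOP/s, 1 TB/s, 70B params at 2 bytes each.
chip = Accelerator(peak_flops=1e15, mem_bandwidth=1e12)
prefill_s, prefill_bound = prefill(chip, 70e9, 2, prompt_tokens=4096)
decode_s, decode_bound = decode_one_token(chip, 70e9, 2)
tokens_per_second = 1.0 / decode_s
```

With these placeholder inputs, prefill comes out compute-bound (about 0.57 s for the 4,096-token prompt) while each generated token is memory-bound at 0.14 s, or roughly 7 tokens per second for a single stream. Raising `peak_flops` alone leaves the decode figure unchanged, which is the point the paragraph above makes about data movement.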

Circular Financing and the Ecosystem Strategy

Beyond licensing deals, Nvidia has deployed capital through a web of strategic investments that critics call "circular financing," but Huang describes as essential to expanding CUDA and the broader Nvidia ecosystem. The numbers are staggering: up to $100 billion committed to OpenAI over time (though no definitive agreement has been finalized, per CFO Colette Kress), $10 billion in Anthropic, $5 billion in Intel, $2 billion in Synopsys, $1 billion in Nokia, and substantial stakes in infrastructure players like CoreWeave. Nvidia participated in nearly 70 venture capital deals in 2025 alone, surpassing the 54 completed in all of 2024.

The strategic logic is multifaceted. Most directly, these investments help finance the AI buildout that drives demand for Nvidia's chips. When Nvidia invests in CoreWeave, which has purchased at least 250,000 Nvidia GPUs worth about $7.5 billion, the money flows in a circuit that returns to Nvidia's top line. But the benefits go beyond revenue cycling. Nvidia's equity stakes in companies like OpenAI and CoreWeave let these firms access debt financing at much better rates than they could on their own, transforming Nvidia from a component supplier into a financial guarantor of the AI infrastructure buildout. Startups that might otherwise face borrowing costs of 15% can instead access capital at rates comparable to those of Microsoft or Google, accelerating their expansion and appetite for Nvidia hardware.

The Intel investment deserves particular attention, given the historical irony. Twenty years after Intel's board rejected the opportunity to acquire Nvidia for $20 billion, Nvidia has invested $5 billion in Intel for a roughly four percent stake. Under the agreement, Intel will develop custom x86 CPUs for Nvidia's data centers and produce a system-on-chip (SoC) integrating Nvidia RTX GPU chiplets for next-generation personal computers. The would-be acquirer now receives capital from the company it once could have owned outright.

The Rubin Roadmap: Architecture as Moat

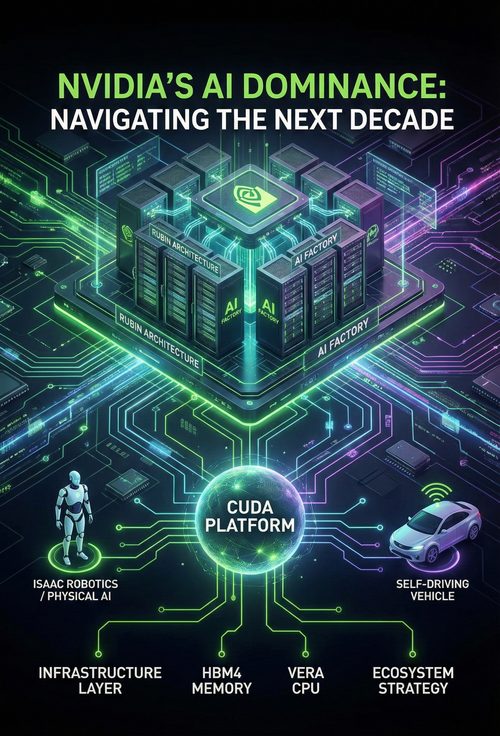

While financial engineering extends Nvidia's influence across the ecosystem, the core source of its competitive advantage remains its silicon roadmap. The upcoming Rubin architecture, scheduled for late 2026, marks a fundamental pivot in Nvidia's approach to AI infrastructure. Moving from individual accelerator chips to what Nvidia calls "AI Factories," Rubin is designed to treat an entire data center as a single, unified computer.

The Rubin R100 GPU’s specs are impressive. It uses TSMC’s advanced three-nanometer process and a multi-die design to get around the size limits of a single chip. Each Rubin unit will offer 50 petaflops of FP4-precision computing, about two and a half times faster than today’s Blackwell GPUs. It also includes HBM4 memory with 288 gigabytes of capacity and 13 terabytes per second of bandwidth, breaking through the “memory wall” that has held back large language model training. The new Vera CPU, built with custom Olympus cores instead of standard ARM designs, lets Nvidia combine CPU, GPU, and networking in a way that’s hard for others to match.
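A few derived quantities help put the quoted Rubin figures in proportion. The short sketch below takes only the numbers stated above (50 petaflops of FP4, 288 gigabytes, 13 terabytes per second, a 2.5x speedup) and assumes decimal units (1 TB = 1e12 bytes); the function names are hypothetical and the results are arithmetic on quoted specs, not measurements.

```python
# Figures quoted in the text; decimal units are an assumption.
RUBIN_FP4_FLOPS = 50e15          # 50 petaflops at FP4 precision
RUBIN_HBM_BYTES = 288e9          # 288 gigabytes of HBM4
RUBIN_HBM_BANDWIDTH = 13e12      # 13 terabytes per second
SPEEDUP_OVER_BLACKWELL = 2.5     # "about two and a half times faster"


def implied_baseline_flops(new_flops, speedup):
    """FP4 throughput the quoted speedup implies for the previous generation."""
    return new_flops / speedup


def full_memory_sweep_seconds(capacity_bytes, bandwidth):
    """Time to stream the entire memory once at peak bandwidth."""
    return capacity_bytes / bandwidth


def ridge_point_flops_per_byte(peak_flops, bandwidth):
    """FLOPs needed per byte moved before compute, not memory, is the limit."""
    return peak_flops / bandwidth


blackwell_flops = implied_baseline_flops(RUBIN_FP4_FLOPS, SPEEDUP_OVER_BLACKWELL)
sweep_s = full_memory_sweep_seconds(RUBIN_HBM_BYTES, RUBIN_HBM_BANDWIDTH)
ridge = ridge_point_flops_per_byte(RUBIN_FP4_FLOPS, RUBIN_HBM_BANDWIDTH)
```

On these figures the implied Blackwell baseline is 20 petaflops, one full pass over memory takes about 22 milliseconds, and a workload needs on the order of 3,800 FLOPs per byte moved before it stops being limited by memory bandwidth.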

But perhaps more significant than the raw specifications is Nvidia's commitment to an annual release cadence. Moving from Blackwell in 2024 to Blackwell Ultra in 2025, Rubin in 2026, and Rubin Ultra in 2027, Nvidia is ensuring that customers must constantly refresh their infrastructure to maintain competitive performance, and that the performance gap with competitors remains perpetually out of reach. The Rubin CPX variant, purpose-built for million-token context processing, signals that future AI systems will need to reason across vast knowledge bases simultaneously, a need that Nvidia is positioning to address with specialized architectures.

Physical AI: The Next Frontier

Jensen Huang has stressed that the next stage of AI goes beyond language models to what he calls “physical AI,” systems that can understand concepts such as friction, inertia, cause and effect, and object permanence. At GTC 2025, Huang said, “the age of generalist robotics is here,” and introduced Isaac GR00T N1, an open-source model to speed up humanoid robot development. Nvidia is also working with General Motors on new autonomous vehicles. The company sees physical AI and robotics as a $50 trillion opportunity.

This is both a strategic opportunity and a defensive necessity. As inference workloads shift from cloud data centers to edge devices like phones, robots, IoT systems, and autonomous vehicles, the market segments multiply beyond what any single architecture can serve. Nvidia's investment in simulation technologies through the Omniverse platform and its Newton physics engine, developed with Google DeepMind and Disney Research, positions the company to deliver the synthetic data-generation capabilities physical AI systems need for training. By controlling both the hardware for inference and the simulation environments for training, Nvidia embeds itself throughout the robotics development value chain.

The CUDA Moat: Strength and Vulnerability

All of Nvidia’s strategies are really about protecting and growing its main advantage: CUDA. This software platform, built over almost 20 years, has more than 4 million developers, 3,000 optimized apps, and is deeply integrated with every major AI framework. This makes it very hard for competitors to break in. For companies that have spent billions on CUDA, switching to something else means not just technical changes but also retraining staff, moving apps, and ensuring everything works on new hardware.

Yet this moat faces its most serious challenges ever. Google's TorchTPU project, developed in collaboration with Meta, aims to enable seamless PyTorch execution on Google's proprietary TPU hardware, directly targeting the switching costs that have locked developers into CUDA. AMD's ROCm ecosystem, while still years behind CUDA in maturity, is making incremental progress with production adoption among leading AI developers. And the hyperscalers themselves—Google, Amazon, Microsoft—are investing billions in custom silicon precisely to reduce their dependency on Nvidia.

The inference market poses the greatest vulnerability, because CUDA matters less for inference workloads than for training. When models are deployed rather than trained, the tight optimization CUDA provides becomes less critical, potentially opening the door for TPUs, AWS Trainium, and other alternatives to gain market share. Some analysts estimate TPU adoption could reach 35% of the inference market by late 2026, a scenario that would force Nvidia into painful pricing decisions and could compress the gross margins that underpin its current valuation.

The Path Forward: Learning from the Fateful Moment

To stay on top, Nvidia has one main rule: never turn down a game-changing deal or fail to see what’s coming. This means investing in AI companies not just for profit, but to keep the whole ecosystem tied to Nvidia. It also means finding creative ways to acquire new technology and talent without drawing regulatory attention and ensuring potential rivals don’t get ahead. Nvidia needs to keep pushing its chip development so competitors can’t catch up, move into physical AI and robotics to meet new demand, and invest in software and services that make it even harder for customers to switch to other hardware.

The Groq deal, the OpenAI partnership, the Intel investment, the Rubin architecture, the Isaac robotics platform—these are not disparate initiatives but interconnected elements of a strategy to make Nvidia indispensable at every layer of the AI stack. Whether this strategy succeeds will determine not just the fate of one company but the structure of the technological foundation upon which artificial intelligence develops over the coming decades.

Somewhere in Intel's archives, there presumably exists documentation of that 2005 board meeting—the presentation Otellini made, the concerns board members raised, the vote that sent him away empty-handed. Twenty years later, that decision stands as perhaps the most consequential rejection in the history of technology. Jensen Huang has built perhaps the most impressive corporate position in modern business history. The challenge now is ensuring that no future historian writes a similar account of a fateful moment when Nvidia's leadership failed to see what the future required. The next several years will reveal whether such foresight, and the will to act on it, can convert dominance into permanence.