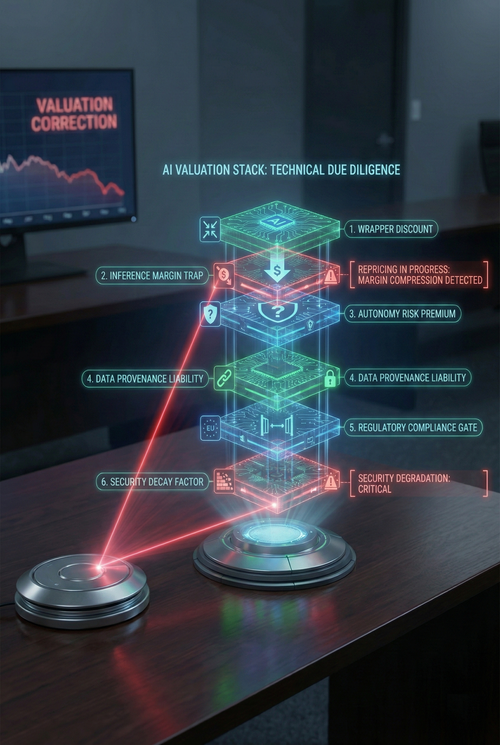

How Technical Due Diligence Actually Reprices Companies — From Wrapper Discounts to the Inference Margin Trap

The first post in this series argued that technical due diligence, as it existed for 20 years, is structurally broken. That was the diagnosis. This is the repricing.

If you accept that the audit has changed in kind, you must accept the consequence: companies are being mispriced. The valuation frameworks investors apply to AI companies still borrow heavily from the Software 1.0 playbook—recurring-revenue multiples, gross-margin benchmarks, customer-acquisition costs—as if the underlying economic structure were the same. It is not. AI companies have different cost structures, risk profiles, and relationships between scale and profitability. Until the audit catches up, capital will continue to flow to the wrong places, at the wrong prices, for the wrong reasons.

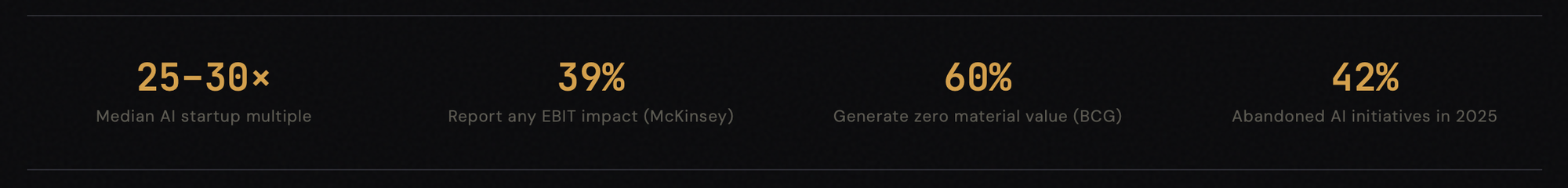

The numbers make the mispricing visible. AI startups command median revenue multiples of 25–30x, about four to five times those of public SaaS companies. Late-stage category-defining companies deliver 40–50x returns, with outliers exceeding 100x. These premiums are, in theory, justified by transformative potential. But the ground truth tells a different story. McKinsey's 2025 State of AI survey, covering about 2,000 organizations, found that while 88% now use AI in at least one function, only 39% report any impact on enterprise-wide EBIT, and most of those attribute less than 5% of EBIT to AI. BCG's research is blunter: 60% of companies generate no material value from their AI investments, and only 5% are creating substantial value at scale. Gartner predicts that over 40% of agentic AI projects will be cancelled by 2027. Meanwhile, the share of companies abandoning most AI initiatives jumped from 17% in 2024 to 42% in 2025. The question is not whether AI deserves a premium. The question is whether the current premium structure reflects the technical and economic realities that underpin it.

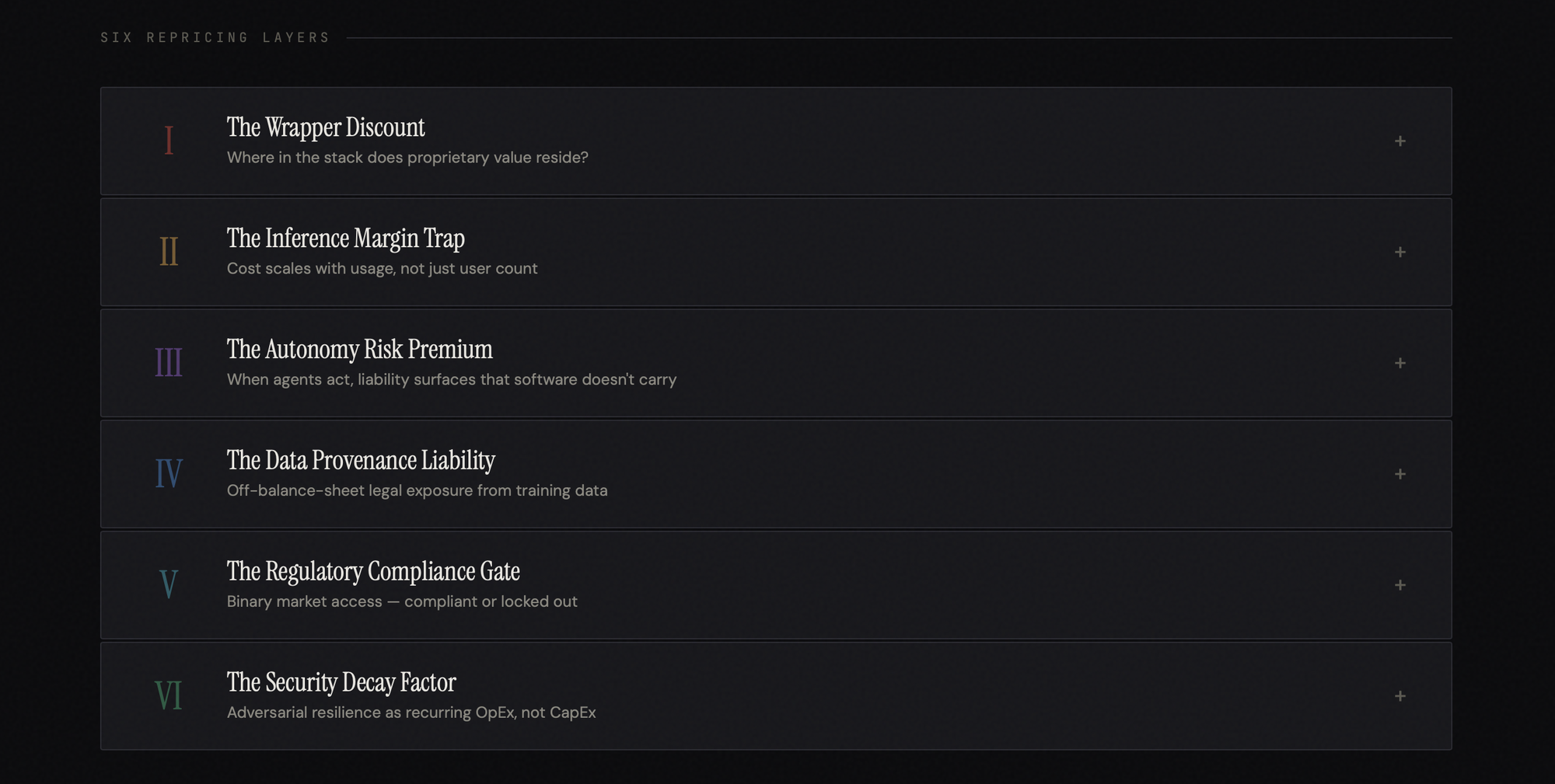

I believe it does not. The correction will be driven by six layers of technical due diligence, each mapping to a specific valuation consequence that most investors ignore.

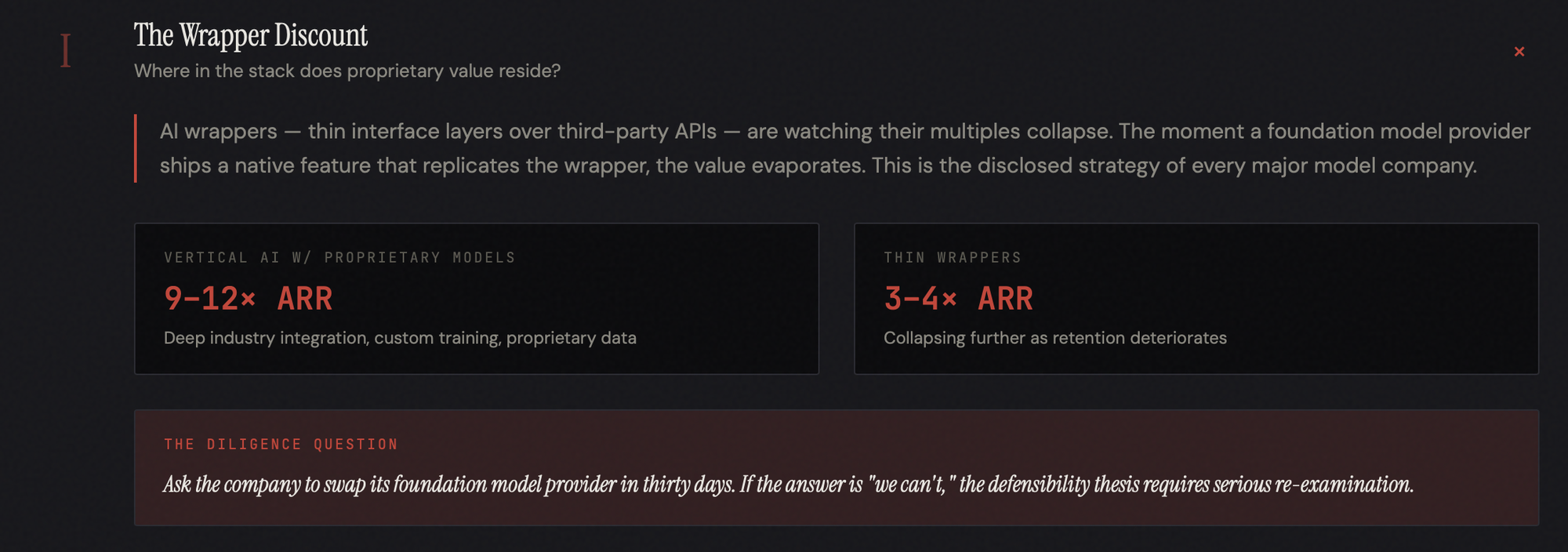

The Wrapper Discount

The first and most visible repricing is already underway. AI wrappers are thin interface layers on top of third-party foundation model APIs. They have no proprietary data, no custom training, and no meaningful retrieval architecture. As a result, their multiples are collapsing. The market is splitting quickly. Vertical AI companies with proprietary models that solve deep industry problems are trading at 9–12x ARR. Wrappers are struggling to hold 3–4x and, in many cases, are being marked down further as retention deteriorates.

The valuation logic is ruthless. A wrapper's competitive position depends entirely on the continued availability, pricing stability, and feature restraint of its upstream model provider. The moment OpenAI, Anthropic, or Google ships a native feature that replicates the wrapper's functionality, the wrapper's value collapses. And they will ship it, because they can see which API calls are most popular. This is not theoretical. It is the disclosed strategy of every major foundation model company: expand the capability surface until the integration layer becomes redundant.

The due diligence question is precise: where in the stack does proprietary value reside, and what is the switching cost if the model layer changes? Companies with proprietary RAG pipelines, custom fine-tuned models on unique datasets, or novel architectures that create performance advantages independent of any single provider can defend their position. Companies whose primary asset is a prompt template cannot. The valuation gap, currently a spread of about 3x to 5x in revenue multiple terms, is, if anything, too narrow.

For the investor, the operational test is straightforward: ask the company to switch its foundation model provider within 30 days. If the answer is "we can't," the defensibility thesis requires serious re-examination.
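
One way to make the 30-day switching test concrete is to check whether the product's model calls go through a thin, provider-agnostic interface. The sketch below is illustrative only: `ChatProvider`, `VendorAProvider`, `VendorBProvider`, and `summarise` are hypothetical names, and the two providers are stand-ins rather than real vendor SDK calls.

```python
from typing import Protocol


class ChatProvider(Protocol):
    """Hypothetical minimal interface that application code depends on."""

    def complete(self, prompt: str) -> str: ...


class VendorAProvider:
    """Stand-in for vendor A; a real adapter would wrap that vendor's SDK."""

    def complete(self, prompt: str) -> str:
        return f"[vendor-a] {prompt}"


class VendorBProvider:
    """Stand-in for vendor B, behaving differently behind the same interface."""

    def complete(self, prompt: str) -> str:
        return f"[vendor-b] {prompt.upper()}"


def summarise(ticket: str, provider: ChatProvider) -> str:
    """Business logic sees only the interface, never a vendor SDK."""
    return provider.complete(f"Summarise: {ticket}")


if __name__ == "__main__":
    # Swapping providers is a one-line change at the call site.
    for provider in (VendorAProvider(), VendorBProvider()):
        print(summarise("refund request", provider))
```

If swapping the provider means touching business logic, prompts, and evaluation suites all at once, "we can't" is probably the honest answer to the 30-day question.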

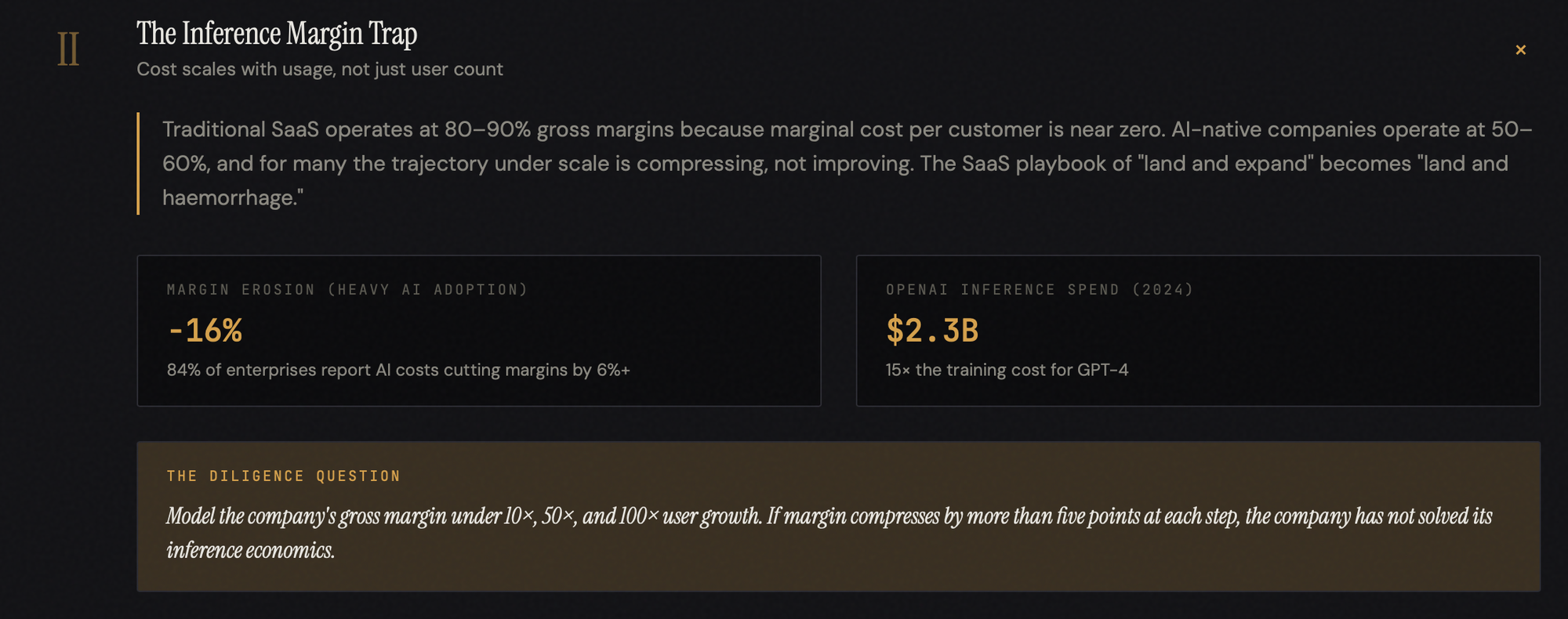

The Inference Margin Trap

The second repricing is more subtle and more consequential because it challenges the financial assumption most SaaS investors treat as axiomatic: that software businesses have high gross margins that improve with scale.

They do not — not in AI.

Traditional SaaS companies operate with gross margins of 80% to 90% because the marginal cost of serving an additional customer is near zero. AI-native companies operate at 50% to 60%, and for many, the trajectory under scale is compressing rather than improving. Eighty-four percent of enterprises report AI infrastructure costs eroding gross margins by 6% or more. For companies where more than half of customers use AI features, that erosion reaches 16%. Read as 16 points of gross margin on a $200 million revenue base, that is $32 million (0.16 × $200 million) in lost EBITDA.

The structural reason is inference. Every query, every agent action, every model invocation consumes compute: GPU hours, memory bandwidth, energy. Unlike traditional hosting, costs scale not just with user count but also with query complexity. An agentic workflow that chains multiple reasoning steps can consume 10 to 100 times as many tokens as a simple completion. OpenAI's inference spend reached $2.3 billion in 2024, fifteen times the training cost for GPT-4. When the cost of goods sold depends on how much customers use your product, the SaaS playbook of "land and expand" becomes "land and haemorrhage" unless unit economics are carefully governed.

The companies solving this do so through architectural discipline: routing simple queries to smaller, cheaper models; quantising to reduce the memory footprint; caching frequent responses; and distilling large models into purpose-built variants. Cursor built a proprietary mixture-of-experts model that runs four times faster than comparable frontier models. The company has crossed $1 billion in annualised revenue, with projected gross margins improving from 74% to 85% by 2027. That is the template. But most companies have not built this infrastructure, and most investors are not asking.
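
Two of these levers, routing and caching, can be sketched in a few lines. This is a toy illustration under stated assumptions, not how any named company does it: the `Router` class, the per-token prices, and the word-count threshold are all hypothetical, and the model call is a placeholder string. Quantisation and distillation are not shown.

```python
from dataclasses import dataclass, field


@dataclass
class Router:
    """Toy cost-aware router: cache first, then small model, then large model."""

    # Hypothetical prices in USD per 1,000 tokens.
    small_price: float = 0.0005
    large_price: float = 0.0150
    # Hypothetical complexity threshold, measured in prompt words.
    max_small_words: int = 20
    cache: dict = field(default_factory=dict)
    spend: float = 0.0

    def answer(self, prompt: str, tokens: int) -> str:
        key = prompt.strip().lower()
        if key in self.cache:
            # Cache hit: the response is served without any inference cost.
            return self.cache[key]
        if len(prompt.split()) <= self.max_small_words:
            tier, price = "small", self.small_price
        else:
            tier, price = "large", self.large_price
        self.spend += tokens / 1000 * price
        response = f"{tier}-model answer"  # placeholder for a real model call
        self.cache[key] = response
        return response
```

In diligence, the useful numbers are the ones this sketch tracks implicitly: the share of traffic served from cache, the share routed to the cheaper tier, and the resulting spend per query.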

The audit question: model the company's gross margin at 10x, 50x, and 100x its current user base. If the margin compresses by more than five points at each step, the company has not solved its inference economics, and the revenue multiple it commands is borrowing from a future that may never arrive.
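
A minimal sketch of that stress test follows. Every input is an assumption made for illustration: revenue per user is held flat, inference is priced per 1,000 tokens, and token consumption per user is assumed to grow as `scale ** intensity_exponent` to stand in for heavier, more agentic usage as the product spreads. With an exponent of zero, the margin in this model does not move with scale at all, which is the Software 1.0 case.

```python
def gross_margin(revenue_per_user: float, tokens_per_user: float,
                 cost_per_1k_tokens: float, other_cogs_per_user: float) -> float:
    """Gross margin as a fraction of revenue for one user."""
    inference_cost = tokens_per_user / 1000 * cost_per_1k_tokens
    return 1 - (inference_cost + other_cogs_per_user) / revenue_per_user


def margins_by_scale(scales, base_tokens_per_user: float, intensity_exponent: float,
                     revenue_per_user: float, cost_per_1k_tokens: float,
                     other_cogs_per_user: float) -> dict:
    """Margin at each scale multiple, under the assumed usage-intensity curve."""
    margins = {}
    for scale in scales:
        tokens = base_tokens_per_user * scale ** intensity_exponent
        margins[scale] = gross_margin(revenue_per_user, tokens,
                                      cost_per_1k_tokens, other_cogs_per_user)
    return margins


def compression_points(margins: dict) -> list:
    """Margin lost, in percentage points, between consecutive scale steps."""
    ordered = [margins[s] for s in sorted(margins)]
    return [round((a - b) * 100, 2) for a, b in zip(ordered, ordered[1:])]


if __name__ == "__main__":
    # Hypothetical company: $100 revenue, 2M tokens, $10 other COGS per user.
    m = margins_by_scale([1, 10, 50, 100], 2_000_000, 0.1, 100.0, 0.01, 10.0)
    for scale, margin in m.items():
        print(f"{scale:>4}x users: gross margin {margin:.1%}")
    print("points lost per step:", compression_points(m))
```

The shape of the usage curve is the real diligence finding; the company's own telemetry on tokens per user over time should replace the assumed exponent.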

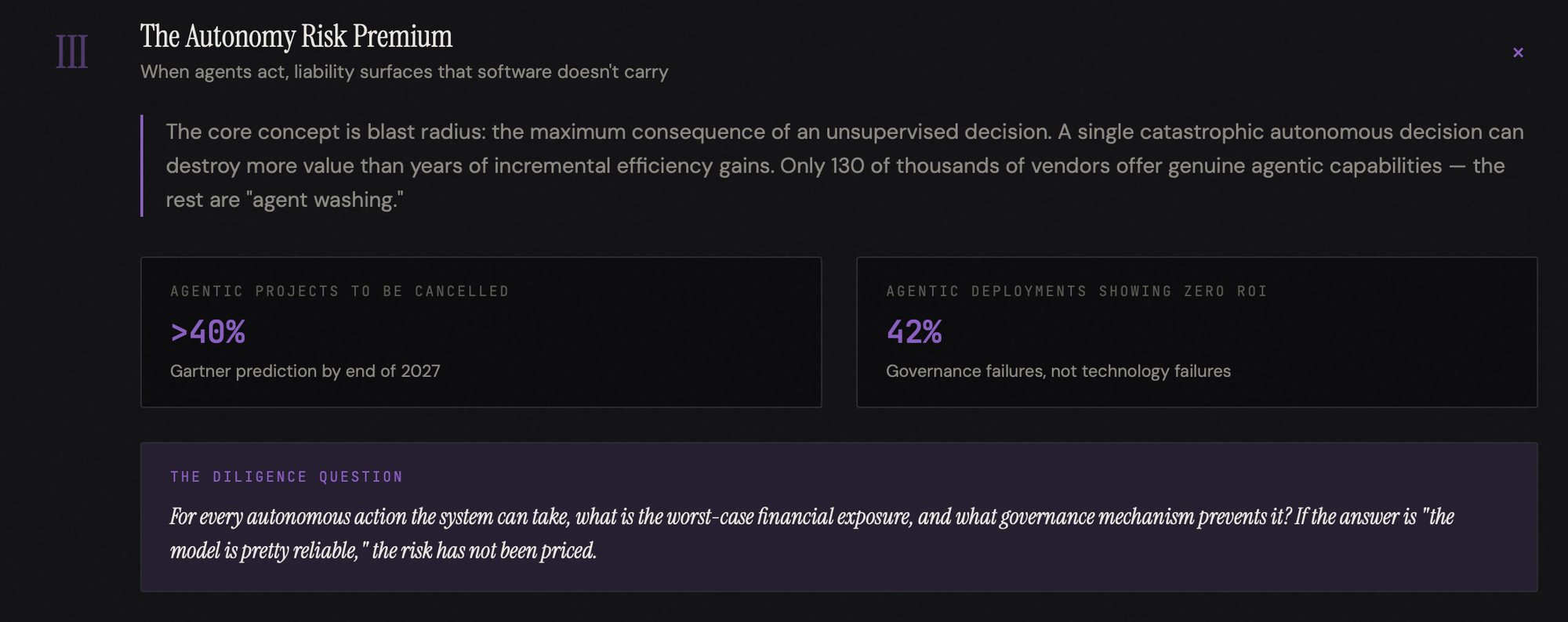

The Autonomy Risk Premium

The third repricing targets the fastest-growing and most poorly understood category: agentic AI. When agents act, not merely suggest, they introduce a liability surface that traditional software does not.

The core concept is blast radius: the maximum potential consequence of an unsupervised decision. A customer service agent with a five-dollar refund ceiling has a contained blast radius. A financial agent executing portfolio rebalancing, a procurement agent authorising purchase orders, and a healthcare agent adjusting treatment protocols carry tail risks that can exceed the cumulative value the agent has ever generated. A single catastrophic autonomous decision can destroy more value than years of incremental efficiency gains.

Gartner's prediction that over 40% of agentic projects will be cancelled is not primarily a technology story. It is a governance story. Only 130 of the thousands of vendors claiming agentic capabilities offer genuine autonomous functionality; the rest are engaged in "agent washing," rebranding chatbots and RPA. Among legitimate deployments, 42% show zero measurable ROI, largely because enterprises measure agents against narrow cost-savings metrics while ignoring the governance infrastructure required to run them safely.

The valuation consequence is a risk-adjusted multiple. A company with robust escalation frameworks, human-in-the-loop oversight for high-stakes decisions, compositional monitoring across every agent subsystem, and a demonstrably bounded blast radius should command a premium. A company shipping autonomous agents without these safeguards is carrying undisclosed contingent liability.

The diligence question: for every autonomous action the system can take, what is the worst-case financial exposure, and what governance mechanism prevents it? If the answer is "the model is pretty reliable," the risk has not been priced.
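
That question can be run as a simple inventory check. The sketch below is hypothetical throughout: the `AgentAction` record, the action names, and the dollar figures other than the five-dollar refund ceiling are invented for illustration, and a single approval flag stands in for whatever governance mechanism the company actually uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentAction:
    name: str
    worst_case_usd: float          # maximum loss from one unsupervised execution
    requires_human_approval: bool  # simplified stand-in for a governance gate


def blast_radius(actions) -> float:
    """Largest worst-case loss among actions the agent can take unsupervised."""
    unsupervised = [a.worst_case_usd for a in actions
                    if not a.requires_human_approval]
    return max(unsupervised, default=0.0)


def unpriced_exposure(actions, autonomy_ceiling_usd: float) -> list:
    """Actions whose worst case exceeds the ceiling with no human gate."""
    return [a for a in actions
            if a.worst_case_usd > autonomy_ceiling_usd
            and not a.requires_human_approval]


if __name__ == "__main__":
    inventory = [
        AgentAction("issue_refund", 5.0, False),
        AgentAction("authorise_purchase_order", 250_000.0, False),
        AgentAction("rebalance_portfolio", 2_000_000.0, True),
    ]
    print("blast radius (USD):", blast_radius(inventory))
    for action in unpriced_exposure(inventory, autonomy_ceiling_usd=1_000.0):
        print("ungated above ceiling:", action.name)
```

An empty result from `unpriced_exposure` is the evidence an acquirer would want to see; "the model is pretty reliable" does not produce one.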

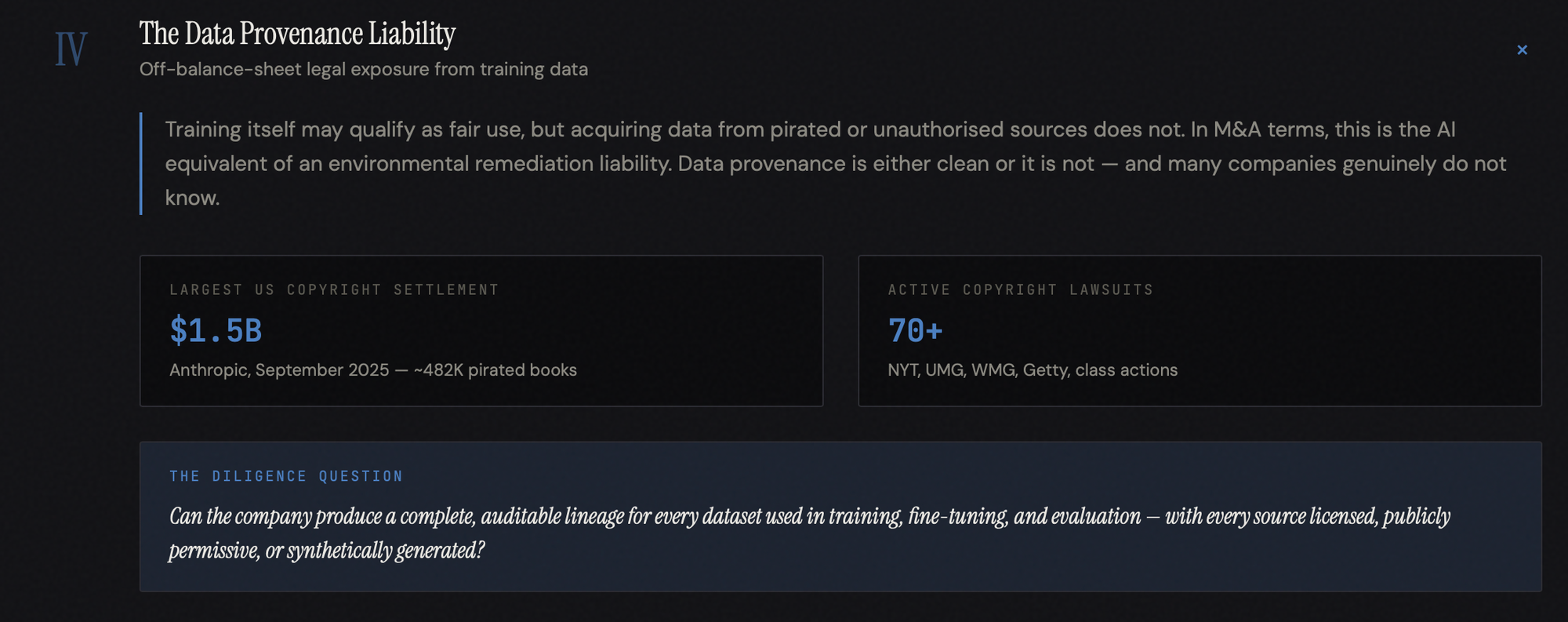

The Data Provenance Liability

The fourth repricing introduces something that most technology valuations have never had to account for: off-balance-sheet legal exposure from training data.

The landmark is concrete. In September 2025, Anthropic agreed to pay $1.5 billion, the largest copyright settlement in United States history, to resolve claims over approximately 482,000 pirated books downloaded from shadow libraries. Over seventy active copyright infringement lawsuits now target AI companies in US courts, involving The New York Times, Universal Music Group, Warner Music Group, Getty Images, and a growing wave of class actions. Courts have found that training itself may qualify as fair use, but acquiring data from pirated or unauthorised sources does not, and the financial exposure for companies on the wrong side of that line is measured in billions.

This matters for valuation because data provenance is either clean or it is not, and many companies do not know whether it is. The lineage was never tracked with the rigour required for a legal challenge. In M&A terms, this is the AI equivalent of an environmental remediation liability in an industrial acquisition.

The case studies confirm the impact. A B2B AI SaaS company with robust IP documentation and exclusive data rights achieved a $112 million exit at 28x ARR. A comparable company with unclear data rights faced a 25% valuation discount despite strong revenue growth. The delta is not a function of technology quality. It is a function of legal hygiene.

The diligence question: can the company produce a complete, auditable lineage for every dataset used for training, fine-tuning, and evaluation, with each source licensed, publicly available, or synthetically generated, and with documented methodology? If the answer is incomplete, the acquirer is buying undisclosed legal exposure.
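
In practice this becomes a manifest audit. The sketch below assumes a hypothetical `DatasetRecord` schema with one acquisition basis and one pointer to evidence per dataset; the field names and example datasets are invented, and a real audit would verify the evidence itself rather than its mere presence.

```python
from dataclasses import dataclass
from typing import Optional

# The three acceptable bases named in the diligence question.
ALLOWED_BASES = {"licensed", "public", "synthetic"}


@dataclass(frozen=True)
class DatasetRecord:
    name: str
    used_for: str            # "training", "fine-tuning", or "evaluation"
    basis: Optional[str]     # one of ALLOWED_BASES, or None if unknown
    evidence: Optional[str]  # licence reference or generation methodology


def lineage_gaps(records) -> dict:
    """Map each dataset with incomplete lineage to the reasons it fails."""
    gaps = {}
    for record in records:
        problems = []
        if record.basis not in ALLOWED_BASES:
            problems.append("no recognised acquisition basis")
        if not record.evidence:
            problems.append("no documented evidence")
        if problems:
            gaps[record.name] = problems
    return gaps


if __name__ == "__main__":
    manifest = [
        DatasetRecord("support_tickets", "fine-tuning", "licensed", "contract ref"),
        DatasetRecord("web_scrape_2021", "training", None, None),
        DatasetRecord("synthetic_dialogues", "evaluation", "synthetic", ""),
    ]
    for name, problems in lineage_gaps(manifest).items():
        print(name, "->", "; ".join(problems))
```

Any non-empty result is the "incomplete" answer described above, and each entry is a line item for the legal workstream.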

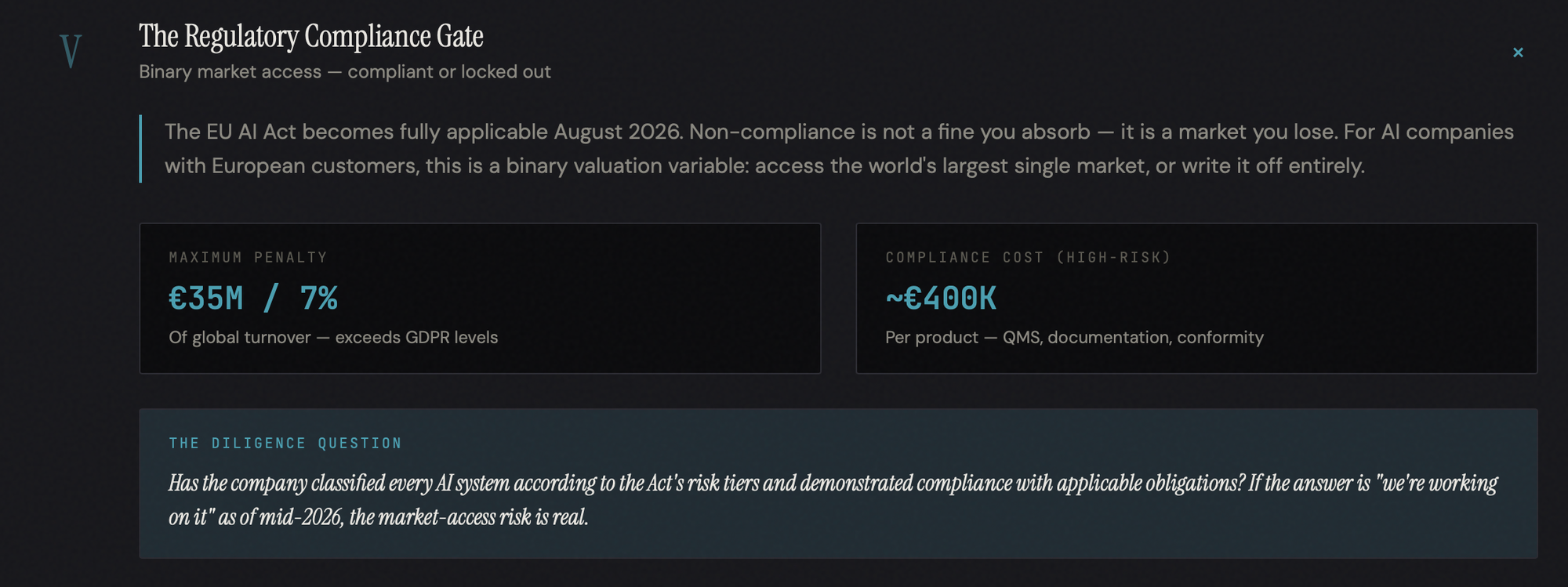

The Regulatory Compliance Gate

The fifth repricing is binary. The EU AI Act becomes fully applicable for most operators on 2 August 2026. Non-compliance carries fines of up to €35 million or 7% of global annual turnover, whichever is higher, exceeding even GDPR penalty levels. High-risk AI systems (anything used in employment decisions, credit scoring, law enforcement, critical infrastructure, or healthcare) must maintain comprehensive technical documentation, implement risk management systems, ensure data governance, provide human oversight, and undergo conformity assessments.

For AI companies with European customers, compliance is a market-access requirement, not an option. A company that has invested in compliance infrastructure can access the world's largest single market without interruption. A company that has not is either facing significant capital expenditure or writing off the European market. Both outcomes affect the valuation.

The diligence question: has the company classified every AI system in accordance with the Act's risk tiers and demonstrated compliance with applicable obligations? If the answer is "we're working on it" as of mid-2026, the market-access risk is real.

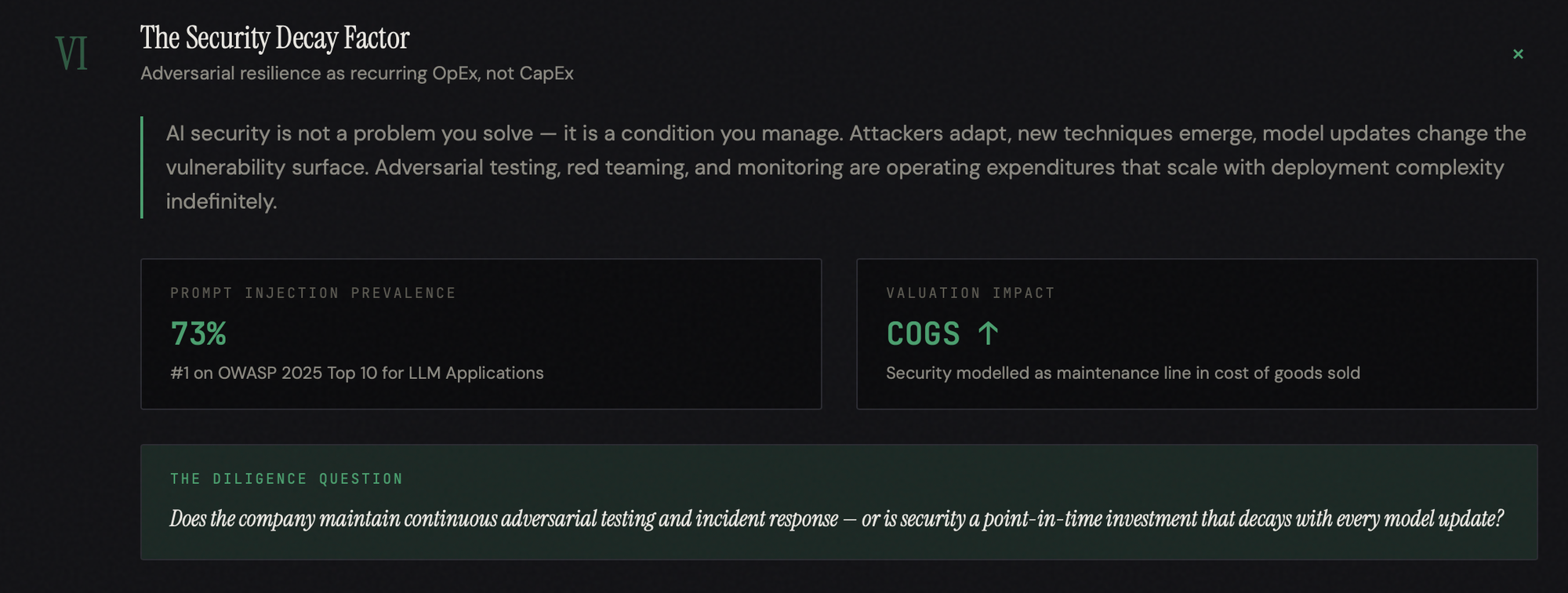

The Security Decay Factor

The sixth repricing reframes adversarial resilience as a recurring cost rather than a one-time investment. Prompt injection ranks number one on OWASP's 2025 Top 10 for LLM Applications, appearing in 73% of production deployments. But the deeper issue is that AI security is not a problem you solve — it is a condition you manage. Attackers adapt. New injection techniques emerge. Model updates change the vulnerability surface. Adversarial testing, red teaming, input filtering, output monitoring, and model hardening are not capital expenditures with a defined end date. They are operating expenditures that scale indefinitely with deployment complexity.

For valuation purposes, this means security maturity should be modelled as a maintenance line item in the cost of goods sold. A company with continuous adversarial testing and incident response protocols carries a known, manageable cost. A company without them is carrying hidden technical debt that will materialise as a breach, a compliance failure, or a forced investment in remediation at scale.

The Convergence: From Six Layers to One Number

These six layers do not operate independently. They interact.

A company might have premium architecture (proprietary models, clean data, strong defensibility) but terrible inference economics that consume the technology premium at the margin. Another might have excellent unit economics but a data-provenance liability that surfaces in litigation 18 months post-close. A third might have robust governance but no pathway to EU market access, constraining its addressable market at the very moment buyers are paying for global scale.

The AI Valuation Stack is a structured method for netting these factors against one another, moving from a single, indiscriminately applied revenue multiple to a risk-adjusted valuation that reflects the underlying technical, economic, legal, and regulatory realities. The companies commanding the highest multiples will not be those with the most impressive demos. They will be those that survive scrutiny across all six layers simultaneously: defensible architecture, governed inference economics, bounded agent risk, clean data provenance, regulatory readiness, and continuous security discipline.
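
As a purely illustrative sketch of what netting could look like, the function below applies one haircut per layer to a headline multiple and compounds them. The multiplicative form, the layer keys, and the haircut values are assumptions made for this example; the framework does not prescribe a formula, and a binary gate such as market access could just as reasonably be modelled as a cap on addressable revenue.

```python
# Hypothetical keys for the six layers named in the text.
LAYERS = ("architecture", "inference_economics", "agent_risk",
          "data_provenance", "regulatory", "security")


def risk_adjusted_multiple(headline_multiple: float, haircuts: dict) -> float:
    """Compound one haircut (0.0 to 1.0) per layer onto a headline multiple."""
    missing = set(LAYERS) - set(haircuts)
    if missing:
        # Every layer must be scored; an unexamined layer is not a zero haircut.
        raise ValueError(f"unscored layers: {sorted(missing)}")
    multiple = headline_multiple
    for layer in LAYERS:
        haircut = haircuts[layer]
        if not 0.0 <= haircut <= 1.0:
            raise ValueError(f"haircut for {layer} must be between 0 and 1")
        multiple *= 1.0 - haircut
    return multiple


if __name__ == "__main__":
    # Illustrative only: a uniform 20% haircut on every layer.
    uniform = {layer: 0.20 for layer in LAYERS}
    print(f"{risk_adjusted_multiple(30.0, uniform):.1f}x")
```

Under these made-up inputs, a 30x headline multiple with a 20% haircut on each of the six layers compounds to roughly 7.9x, which shows how individually modest discounts stack.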

The wrapper discount, the inference margin trap, the autonomy risk premium, the data provenance liability, the regulatory compliance gate, and the security decay factor are not abstractions. They are repricing mechanisms, operating now, in every term sheet and deal committee where the question is no longer "Is this company using AI?" but "Is this company's AI worth what we're being asked to pay?"

The investors who adopt this framework first will be the ones who avoid paying 30x revenue for a company that, under proper examination, is worth 8x.