There is a moment in every technological transition when the metaphors we use to understand the change become the very thing that blinds us to its magnitude. We called automobiles "horseless carriages." We called the internet "the information superhighway." And today, we call autonomous AI systems "tools."

This is the mistake that will define which organizations thrive in the next decade and which become footnotes in business school case studies.

The current development is not merely the introduction of more advanced software. Rather, it marks the emergence of a new category of organizational participant—one that perceives, reasons, acts, and learns through functional execution rather than mere simulation. Organizations that recognize this distinction early will not only outperform competitors but will also operate within a fundamentally different strategic context.

The Scattered Tools Trap

There is an approach to AI strategy that sounds reasonable in boardrooms and strategy sessions. Start small. Run pilots across departments. Let innovation bloom organically. Scale what succeeds. This has the comforting appearance of prudence—spreading risk, maintaining flexibility, avoiding premature commitment.

However, this approach increasingly leads to irrelevance.

When organizations attempt to "democratize" AI across the enterprise, individual departments receive specialized tools: marketing adopts content-generation systems, sales uses lead-scoring platforms, customer service implements chatbots, and engineering employs code-completion assistants. While each investment is justifiable and demonstrates isolated efficiency gains, the organization as a whole remains fundamentally unchanged.

Each tool operates in isolation, powered by generic models and generating outputs that require manual integration into existing workflows. Furthermore, each tool demands adoption effort and workflow modification, yet none provides sufficient value to warrant a fundamental reorganization of work processes. As a result, the organization's competitive position remains static, with competitors achieving similar marginal gains through identical tools. Under economic pressure, these fragmented investments are typically the first to be eliminated.

The uncomfortable truth emerging from the first wave of enterprise AI deployment is that marginal improvements scattered across many domains do not compound into competitive advantage. They compound into complexity, cost, and organizational fatigue. Shallow deployments fail not because the technology is inadequate, but because they never generate the feedback loops that allow AI to improve. A chatbot collects transcripts, but if those transcripts are not connected to resolution outcomes and organizational knowledge, the data is noise. The system optimizes its local function, but the organization as a whole does not learn.

This is the trap: the appearance of progress without the substance of transformation.

The Illusion of the Intelligent Tool

The scattered-tools approach persists because it rests on a flawed mental model: the assumption that AI is fundamentally a tool, and that tools can be deployed incrementally wherever they add value.

For the past three years, the dominant narrative around artificial intelligence has centered on generation—the exceptional ability of large language models to produce text, code, and images from simple prompts. This capability, while genuinely remarkable, has conditioned us to think of AI as an oracle. You ask a question; it provides an answer. The relationship is fundamentally passive. The system waits for you.

But consider what happens when we remove that passivity. When we give a system not just the ability to respond, but the capacity to pursue objectives. When we connect it to the actual systems of record that run your business: inventory databases, customer relationship platforms, and financial ledgers. When we give it memory that persists across interactions, so that it can learn from its successes and failures over time.

The result is no longer a tool in the traditional sense, but rather an operator within the organization.

This architectural shift underpins what researchers refer to as the "Agentic Enterprise." The transition is not merely from less advanced to more advanced AI, but from systems that generate to those that execute; from probability engines that guess to reasoning engines that verify; and from passive assistants to active colleagues.

The implications of this shift challenge foundational assumptions regarding organizational function.

The Architecture of Autonomy

To understand why this matters, we must briefly examine what makes an autonomous system actually autonomous, not in marketing language but in technical reality.

A classical large language model, for all its sophistication, is fundamentally stateless. Each interaction exists in isolation. The system has no persistent understanding of who you are, what you have discussed before, or what happened as a result of its previous suggestions. It cannot check whether its recommendation worked. It cannot learn from the outcome. It generates a response based on statistical likelihood and then, in a real sense, forgets.

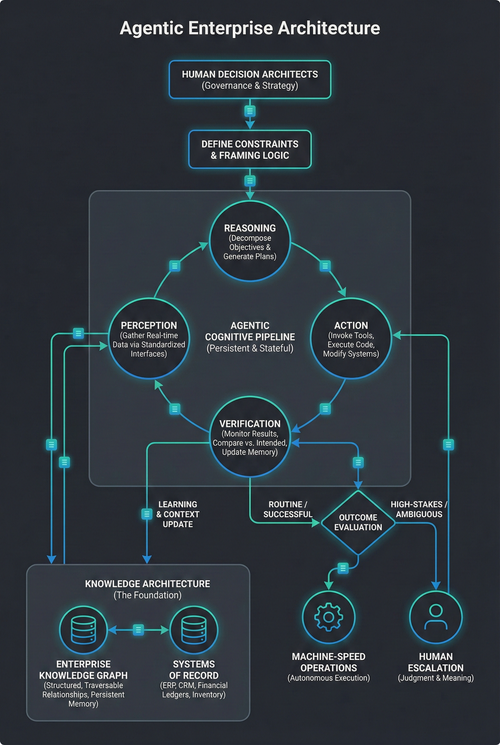

An agentic system operates through a distinct cognitive pipeline. It begins with perception: gathering real-time data from your enterprise systems through standardized interfaces. It then reasons, decomposing complex objectives into executable sub-tasks and generating a plan before taking action. It acts, invoking external tools, writing and executing code, and making changes to actual systems. And critically, it verifies: monitoring the results of its actions, comparing them against intended outcomes, and updating its approach based on what it learns.

This represents a categorical difference rather than a mere incremental enhancement.
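The perceive, reason, act, and verify stages can be made concrete with a small sketch. The Python below is an illustration under stated assumptions, not a description of any particular product: `RestockAgent`, `Step`, and the stock figures are hypothetical, and an in-memory dictionary stands in for a real system of record.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    """One planned sub-task and whether its effect was later confirmed."""
    item: str
    quantity: int
    verified: bool = False


@dataclass
class RestockAgent:
    inventory: dict[str, int]  # hypothetical stand-in for a system of record
    targets: dict[str, int]    # the objective: desired stock levels
    memory: list[Step] = field(default_factory=list)  # persists across runs

    def perceive(self) -> dict[str, int]:
        # Perception: read the current state instead of assuming it.
        return dict(self.inventory)

    def plan(self, state: dict[str, int]) -> list[Step]:
        # Reasoning: decompose the objective into sub-tasks before acting.
        return [
            Step(item, target - state.get(item, 0))
            for item, target in self.targets.items()
            if state.get(item, 0) < target
        ]

    def act(self, step: Step) -> None:
        # Action: change the underlying system.
        self.inventory[step.item] = self.inventory.get(step.item, 0) + step.quantity

    def verify(self, step: Step) -> bool:
        # Verification: re-read the system and compare with the intent.
        step.verified = self.perceive().get(step.item, 0) >= self.targets[step.item]
        return step.verified

    def run(self) -> list[Step]:
        steps = self.plan(self.perceive())
        for step in steps:
            self.act(step)
            self.verify(step)
            self.memory.append(step)  # the outcome is remembered, not discarded
        return steps


agent = RestockAgent(inventory={"bolts": 40, "nuts": 100},
                     targets={"bolts": 100, "nuts": 80})
first = agent.run()   # one step: order 60 bolts, then confirm the new level
second = agent.run()  # no steps: the earlier action was already verified
```

The second run plans nothing because the agent re-reads the system before reasoning. A stateless generator given the same prompt twice would produce the same recommendation twice.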

The technical infrastructure to support this kind of autonomy is substantial. The system needs memory beyond simple document retrieval. Traditional approaches that search for similar-sounding information fail when the task requires connecting facts across multiple logical steps. If an agent needs to understand that a supply disruption at Supplier X will affect Production Line Y, which feeds Customer Z's priority order, it cannot rely solely on semantic similarity. It needs the relationships to be explicit and traversable.

This is why forward-thinking organizations are investing in knowledge architectures that map entities and their relationships as structured graphs. When an agent queries this kind of memory, it does not guess at connections—it follows them deterministically. The difference in reliability is not marginal; it is the difference between a system you can trust with consequential decisions and one you cannot.
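To illustrate what "explicit and traversable" means, here is a minimal sketch of the supplier example as a graph walk. The entity names, the relationship labels, and the intermediate `Order 1001` node are hypothetical, and a production system would use a graph database rather than a Python dictionary.

```python
from collections import deque

# Hypothetical edges: (source, relationship, target)
EDGES = [
    ("Supplier X", "supplies", "Production Line Y"),
    ("Production Line Y", "fulfils", "Order 1001"),
    ("Order 1001", "placed_by", "Customer Z"),
    ("Supplier Q", "supplies", "Production Line R"),
]


def build_graph(edges):
    """Index edges by source so relationships can be followed directly."""
    graph = {}
    for source, relation, target in edges:
        graph.setdefault(source, []).append((relation, target))
    return graph


def downstream(graph, start):
    """Breadth-first traversal from `start`.

    Returns each reachable entity mapped to the chain of explicit
    relationships that connects it back to `start`.
    """
    paths = {}
    seen = {start}
    queue = deque([(start, [])])
    while queue:
        node, path = queue.popleft()
        for relation, target in graph.get(node, []):
            if target not in seen:
                seen.add(target)
                paths[target] = path + [(node, relation, target)]
                queue.append((target, paths[target]))
    return paths


graph = build_graph(EDGES)
impact = downstream(graph, "Supplier X")
# impact["Customer Z"] holds the three-hop chain from supplier to customer
```

Every entry in `impact` comes with the path that justifies it, which is what lets a reviewer check the conclusion. A similarity search could surface documents that mention Supplier X and Customer Z, but it would not establish that one depends on the other.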

The Concentrated Bet

If the scattered-tools approach constitutes a strategic trap, what alternative should organizations pursue?

The solution is not to select a single business domain—such as underwriting over fraud detection, or marketing over operations—and deploy AI exclusively within that area. While this logic may apply to traditional workflow automation, agentic AI is architecturally distinct. Its value proposition is inherently cross-functional.

A knowledge graph restricted to a single department undermines its intended purpose. The true value lies in traversing relationships across the entire organization, such as linking customers, contracts, suppliers, and competitors. Agent-to-agent protocols are designed to facilitate collaboration across organizational silos, enabling, for example, a sales agent to coordinate with a logistics agent and confirm task completion. Governance frameworks must therefore encompass the entire enterprise, as agents will inevitably operate across departmental boundaries.

The strategic focus should not be on individual domains, but rather on the underlying infrastructure.

This means investing in the semantic layer that maps your organizational knowledge. It means building connectivity protocols that enable agents to discover and collaborate. It means designing governance frameworks that define when humans intervene, when agents escalate, and how authority flows between human and artificial participants. It means establishing measurement systems that track not just outputs but also the health of your decision environment.

This infrastructure serves as a strategic moat. While competitors may replicate a chatbot within weeks, they cannot easily duplicate an enterprise-wide knowledge architecture, a mature governance framework, or a workforce adept at collaborating with autonomous systems.

The strategic question is not "where should we deploy AI?" but "are we building the infrastructure that allows intelligence to compound across the organization?"

The Collapse of a Boundary

Perhaps the most profound implication of this shift is what it does to a distinction we have taken for granted since the dawn of management theory: the distinction between tools and workers.

Tools are owned. They are predictable. They depreciate on a schedule. Workers are managed. They are adaptive. They appreciate through experience and training. Our entire organizational infrastructure, from accounting standards to human resources practices to legal frameworks, is built on this binary.

Agentic AI collapses it.

These systems are owned like assets but behave like workers. They require supervision like employees, but can be deployed like software. They depreciate through model drift but appreciate through fine-tuning and accumulated experience. They demand both human resource approaches and asset management techniques, simultaneously.

Recent global research indicates that seventy-six percent of executives perceive agentic AI as more akin to a coworker than a tool. This perspective reflects operational reality rather than sentiment. When a system can plan, execute, and adapt—pursuing complex goals without continuous human intervention—traditional categories become obsolete.

The organizational response to this collapse cannot be incremental. You cannot manage a new category of participant with old categories of thought. What is required is a fundamental reimagining of how authority, accountability, and decision-making flow through the enterprise.

Decision Architecture Framework

Moving accountability upstream: defining the logical environment in which autonomous agents operate

Governance & Strategy

Knowledge Layer

Semantic Mapping

Before an agent can act, it must have a reliable map of the organization.

Structured Graphs

Replace simple document retrieval with knowledge graphs that map entities (customers, contracts, suppliers) and their traversable relationships.

Contextual Memory

Ensure the system has persistent memory of previous interactions and outcomes to allow for learning through functional execution.

Logic Layer

Constraints & Objectives

This layer defines the "framing logic" that guides agent behavior without constant human intervention.

Objective Definition

Clearly state the high-level goals (e.g., "optimize procurement for speed, then cost").

Constraint Discovery

Define the boundaries (legal, ethical, and budgetary) within which the agent is permitted to reason and act.

Reasoning-before-Action

Force a "cognitive pipeline" where the agent must decompose tasks and generate a plan before executing code or modifying systems.
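One way to read the Logic Layer is as a filter followed by a ranking: constraints remove impermissible options, then the objective orders what remains. The sketch below assumes a hypothetical `Offer` record, a single budget constraint, and an approved-vendor flag; none of these come from a specific system.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Offer:
    supplier: str
    lead_time_days: int
    cost: float
    approved_vendor: bool


def within_constraints(offer: Offer, budget: float) -> bool:
    # Constraints are checked first: options outside them are never ranked.
    return offer.approved_vendor and offer.cost <= budget


def choose(offers: list[Offer], budget: float) -> Optional[Offer]:
    allowed = [o for o in offers if within_constraints(o, budget)]
    if not allowed:
        return None  # nothing permissible: a case for escalation
    # "Speed, then cost": tuples compare lead time first, cost second.
    return min(allowed, key=lambda o: (o.lead_time_days, o.cost))


offers = [
    Offer("A", 3, 1200.0, True),
    Offer("B", 3, 1000.0, True),
    Offer("C", 1, 900.0, False),  # fastest, but not an approved vendor
    Offer("D", 2, 5000.0, True),  # fast, but over budget
]
best = choose(offers, budget=2000.0)  # supplier "B"
```

Suppliers C and D are faster than B, yet neither is ever considered, because the constraint check runs before the objective is applied. Swapping the tuple to `(o.cost, o.lead_time_days)` would express "cost, then speed" without touching the constraints.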

Governance Layer

The Flow of Authority

This defines the interface between human judgment and machine speed.

Escalation Protocols

Establish explicit triggers for when an agent must route a high-stakes decision back to a human "decision-architect".

Intervention Rights

Define the conditions under which a human supervisor overrides agent actions.

Agent-to-Agent Protocols

Standardize how agents from different silos discover each other and collaborate on cross-functional tasks.
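Discovery between agents can be pictured as a lookup by capability rather than by department. The sketch below is a deliberately simplified, in-process illustration: `AgentRegistry`, the `allocate_shipping` capability name, and the delivery figures are all hypothetical, and a real agent-to-agent protocol would add networking, authentication, and negotiation.

```python
from typing import Callable, Optional

Handler = Callable[[dict], dict]


class AgentRegistry:
    """Maps a capability name to the agent that offers it."""

    def __init__(self) -> None:
        self._by_capability: dict[str, Handler] = {}

    def register(self, capability: str, handler: Handler) -> None:
        self._by_capability[capability] = handler

    def discover(self, capability: str) -> Optional[Handler]:
        return self._by_capability.get(capability)


def logistics_agent(request: dict) -> dict:
    # Hypothetical rule: expedited orders ship in 1 day, others in 5.
    days = 1 if request["expedite"] else 5
    return {"order_id": request["order_id"], "confirmed": True, "delivery_days": days}


def sales_agent(order_id: str, registry: AgentRegistry) -> dict:
    handler = registry.discover("allocate_shipping")
    if handler is None:
        # No peer offers the capability: fall back to a human-handled ticket.
        return {"order_id": order_id, "confirmed": False}
    return handler({"order_id": order_id, "expedite": True})


registry = AgentRegistry()
registry.register("allocate_shipping", logistics_agent)
result = sales_agent("SO-1", registry)
```

The sales agent never names the logistics agent. It asks for a capability, which is what allows a handoff to keep working when the team or system behind that capability changes.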

Verification Layer

Audit & Feedback

The system must verify its own results to enable compounding intelligence.

Reasoning Audit Trails

Capture the logical steps and data points the agent used to arrive at a specific action, not just the outcome itself.

Outcome Monitoring

Implement real-time monitoring to compare actual results against intended objectives, updating the system's approach based on successes or failures.

Decision Environment Health

Track metrics that measure the health and reliability of the overall decision-making environment rather than just isolated task efficiency.
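A reasoning audit trail implies a record that stores the steps and evidence alongside the action, with the actual outcome filled in later by monitoring. The structure below is one possible shape, offered as a sketch: the field names and the example identifiers (`contract:existing-agreement`, `proposal:supplier-terms`) are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class ReasoningStep:
    claim: str
    evidence: list[str]  # references to the data points the claim rests on


@dataclass
class AuditRecord:
    agent: str
    objective: str
    action: str
    intended_outcome: str
    steps: list[ReasoningStep] = field(default_factory=list)
    actual_outcome: Optional[str] = None  # set later by outcome monitoring

    def add_step(self, claim: str, *evidence: str) -> None:
        self.steps.append(ReasoningStep(claim, list(evidence)))

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)


record = AuditRecord(
    agent="procurement-agent",
    objective="review proposed supplier terms",
    action="flag conflict for human review",
    intended_outcome="conflict resolved before signing",
)
record.add_step("Proposed terms grant exclusivity", "proposal:supplier-terms")
record.add_step(
    "An existing agreement already grants exclusivity",
    "contract:existing-agreement",
)
serialized = record.to_json()
```

Because `actual_outcome` starts empty, the same record can later be compared against `intended_outcome`, which is the link between the audit trail and outcome monitoring.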

A Living System, Not a Waterfall

These layers are not sequential stages but concurrent systems. The Knowledge Layer feeds the Logic Layer, which constrains the Governance Layer, which triggers the Verification Layer—but verification updates knowledge, governance refines logic, and the cycle continues. The architecture is a living system that enables compounding intelligence.

The Agentic Cognitive Pipeline

Machine-Speed Operations

Autonomous Execution

Routine, well-defined tasks proceed at machine speed without human intervention. The system handles high-volume, low-risk decisions autonomously.

Human Escalation

Judgment & Meaning

High-stakes, ambiguous, or novel situations route to human decision-architects. Humans provide judgment, ethical reasoning, and strategic direction.
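The split between machine-speed execution and human escalation can be expressed as an explicit routing rule. The sketch below assumes three hypothetical signals (monetary exposure, the agent's self-reported confidence, and whether a similar case exists in memory) and illustrative thresholds. Real triggers would be set by the organization's own governance.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTONOMOUS = "autonomous"
    HUMAN = "human"


@dataclass(frozen=True)
class Decision:
    description: str
    amount: float       # monetary exposure
    confidence: float   # agent's self-reported confidence, 0.0 to 1.0
    seen_before: bool   # whether a similar case exists in memory


# Hypothetical thresholds, chosen only for illustration.
MAX_AUTONOMOUS_AMOUNT = 10_000.0
MIN_CONFIDENCE = 0.8


def route(decision: Decision) -> tuple[Route, list[str]]:
    """Return the route plus the reasons, so the escalation is explainable."""
    reasons = []
    if decision.amount > MAX_AUTONOMOUS_AMOUNT:
        reasons.append("high stakes")
    if decision.confidence < MIN_CONFIDENCE:
        reasons.append("ambiguous")
    if not decision.seen_before:
        reasons.append("novel")
    return (Route.HUMAN if reasons else Route.AUTONOMOUS), reasons


routine = route(Decision("reorder packaging", 500.0, 0.95, True))
unusual = route(Decision("out-of-policy refund", 50_000.0, 0.6, False))
```

Returning the reasons with the route means the human who receives an escalation also receives the explanation for it.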

The Question of Authority

If an autonomous system is capable of making decisions, the question arises: who holds responsibility for those decisions?

While the instinctive response is that humans remain responsible for all outcomes, this answer is insufficient. The more critical question concerns who designs the systems that frame available choices.

This is the shift from what we might call traditional decision rights to a new paradigm. In the old model, we ask: "Who made this decision?" In the agentic model, we must ask: "Who designed the architecture that generated the options? Who defined the constraints? Who trained the framing logic?"

Consider a scenario where an autonomous procurement agent negotiates a supplier contract that later proves problematic. The agent did not act randomly; it acted within a framework of objectives, constraints, and logic defined by humans. Accountability does not vanish—it shifts upstream to the architecture itself.

This requires executives to think of themselves less as decision-makers and more as decision-architects. The strategic task is no longer to make the right choices, but to design environments in which good choices become systematically more likely. This is a profound cognitive shift for leaders trained to value judgment and intuition above all else.

Organizations need explicit protocols for when human intervention is required, when autonomous action is permitted, and how authority flows between human and artificial participants. They need audit trails that capture not just outcomes but reasoning—the logical steps an agent took to arrive at its action. They need escalation paths that route high-stakes decisions to human judgment while allowing routine operations to proceed at machine speed.

Currently, most organizations lack this essential infrastructure. Developing it constitutes the central leadership challenge for the next five years.

Infrastructure Milestones & Success Narratives

Sustaining commitment through the valley: concrete checkpoints and stories to communicate progress during the first 18 months of agentic transformation

Infrastructure Milestones

Celebrate these checkpoints before ROI appears—they prove the foundation is being built

Phase 1: Foundation

Months 1–6

Establishing the architectural groundwork. Success in this phase means the organization has a clear map of what it knows, what rules govern action, and how authority flows.

Data Inventory Complete

Target: 100% of critical systems catalogued with entity definitions

Graph Schema Defined

Target: Core entity types and relationships documented

Objective Taxonomy Created

Target: Priority hierarchies for 3+ business domains

Constraint Boundaries Documented

Target: Legal, ethical, and budgetary limits codified

Escalation Protocols Drafted

Target: Decision thresholds defined for human routing

Audit Trail Architecture Designed

Target: Reasoning capture schema approved

Phase 2: Integration

Months 7–12

Connecting systems and activating agents. Success means live data flows through the knowledge graph, agents operate within defined constraints, and escalation paths function.

Systems of Record Connected

Target: 80%+ of critical data sources feeding graph

Graph Populated with Live Data

Target: 10,000+ entity relationships traversable

First Agents Operational

Target: 3+ agents executing within constraints

Escalation Paths Functioning

Target: 95%+ high-stakes decisions routed correctly

Agent-to-Agent Protocols Tested

Target: Cross-functional handoffs successful

Real-time Monitoring Operational

Target: Dashboard tracking agent outcomes live

Phase 3: Optimization

Months 13–18

Refining based on operational learning. Success means the system learns from its own performance, human-agent collaboration patterns stabilize, and the environment health is measurable.

Cross-functional Traversal Active

Target: Multi-hop queries across 3+ domains

Constraints Refined by Learning

Target: 2+ constraint updates from operational data

Human-Agent Patterns Stabilized

Target: Intervention rate below 15%

Environment Health Metrics Live

Target: Decision quality score tracked weekly

Feedback Loop Operational

Target: Outcomes updating agent behavior

Contextual Memory Persisting

Target: Agents recalling prior interactions
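Two of the targets above, the 95% escalation-routing target from Phase 2 and the 15% intervention-rate ceiling from Phase 3, can be computed from a decision log. The sketch below assumes a hypothetical log format and treats "intervention rate" as the share of all agent decisions that a human overrode. Both the format and that definition are assumptions.

```python
def escalation_accuracy(log):
    """Share of high-stakes decisions that were routed to a human."""
    high_stakes = [d for d in log if d["high_stakes"]]
    if not high_stakes:
        return None  # no high-stakes decisions yet, so nothing to measure
    return sum(d["routed_to_human"] for d in high_stakes) / len(high_stakes)


def intervention_rate(log):
    """Share of all decisions in which a human overrode the agent."""
    if not log:
        return None
    return sum(d["overridden"] for d in log) / len(log)


# Hypothetical log: 20 decisions, 4 of them high-stakes, 2 overridden.
log = [
    {"high_stakes": i < 4, "routed_to_human": i < 4, "overridden": i in (0, 7)}
    for i in range(20)
]

accuracy = escalation_accuracy(log)  # 4 of 4 routed correctly
rate = intervention_rate(log)        # 2 of 20 overridden

meets_phase_2 = accuracy is not None and accuracy >= 0.95
meets_phase_3 = rate is not None and rate < 0.15
```

Returning `None` for an empty log keeps "not yet measurable" distinct from "measured at zero", which matters early in a rollout when few high-stakes decisions have occurred.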

Success Narratives

Stories that sustain belief when dashboards still show red—share these to demonstrate transformation

The Analyst Who Solved It in Minutes

"A regional compliance analyst received a complex audit query that would normally require pulling data from four systems and cross-referencing three policy documents. The knowledge graph traversed the relationships in seconds. What used to take two hours took ten minutes—and the analyst spent that time reviewing the answer, not hunting for it."

When Sales Met Logistics—Autonomously

"A sales agent identified a high-value customer order that required expedited shipping. Instead of generating a ticket and waiting, it discovered the logistics agent via protocol, negotiated priority allocation, and confirmed delivery timing—all before the sales rep finished her coffee. The customer received a commitment in minutes, not days."

The Contract Clause That Almost Wasn't

"During contract review, the procurement agent traversed the knowledge graph and discovered that a supplier's proposed terms conflicted with an exclusivity clause in an existing agreement with a competitor. A human reviewer had missed it in three prior passes. The agent flagged it, cited the specific clause, and prevented a potential $2M liability."

The Decision That Required a Human

"A customer service agent encountered an unusual refund request: a long-standing customer, a product failure, but circumstances that fell outside standard policy. Instead of applying rules mechanically or hallucinating an answer, the agent packaged the context, cited the relevant policy gaps, and escalated to a human decision-architect—who approved an exception that preserved a $500K annual relationship."

Sustaining Commitment Through the Valley

The most challenging period in any deep transformation is the middle, roughly months six through eighteen, when costs are tangible, but benefits are not yet visible. Infrastructure investments do not show ROI on quarterly dashboards. Adoption curves take time to climb. The scattered-tools approach is appealing because it avoids this test of conviction. You are never wrong because you never fully commit. You are also never right because you never concentrate enough to find out.

Four practices help sustain commitment through this period.

First, celebrate infrastructure milestones, not just outcomes. Treat completing your knowledge architecture or reaching eighty percent protocol coverage as wins worth recognizing. If you celebrate only ROI, you will lose momentum during the build phase.

Second, share stories, not just scorecards. Concrete examples of how the new system changes daily work sustain belief when dashboards still show red. An analyst solves a complex problem in ten minutes instead of two hours. A manager approves a complicated case without calling three supervisors. These narratives carry organizations through uncertainty.

Third, set explicit criteria for what "not working" means before you start. Define specific metrics and timelines that would trigger a strategic pivot. This gives you permission to exit without the sunk cost fallacy taking over. Paradoxically, it creates more commitment because the team knows the bet is bounded.

Fourth, maintain visible executive commitment. The CEO or business unit leader should reference this initiative in every quarterly address. Sustained executive attention signals this is not a side project vulnerable to the next budget cycle.

Pivot Logic Framework

Knowing when to persist and when to pivot during the transformation valley

The Transformation Timeline

What to Measure at Each Phase

Are you hitting milestones for data integration, system connectivity, and foundational capabilities?

What percentage of target users are actively using the system weekly? Is usage increasing?

Now traditional measures should move: downtime reduction, approval time, inventory turns, conversion rates.

Are you pulling away from competitors? Can you offer service levels at price points they cannot match?

Pre-Defined Decision Criteria

Signals to Persist

Continue the current trajectory

Infrastructure milestones are being hit on schedule (±20%)

Adoption velocity is increasing month-over-month

Early users report qualitative improvements in decision quality

Technical foundation is proving reusable across use cases

Competitors are struggling to replicate your infrastructure

Signals to Pivot

Redirect resources to a different approach

Adoption remains below 60% after training and iteration

Target outcome hasn't improved by threshold (e.g., 15%) within defined timeline (e.g., 18 months)

Competitors have succeeded in a different domain with clearer results

Data remediation requirements have expanded beyond original scope

Regulatory or market shifts have invalidated the value hypothesis
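The quantifiable signals above lend themselves to being written down before the work starts. The sketch below encodes three of them using the example thresholds from the lists (±20% schedule tolerance, 60% adoption, 15% improvement, 18 months). The `Snapshot` fields are hypothetical, and applying the adoption and outcome thresholds only at the deadline is an assumption. The qualitative signals still need human judgment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExitCriteria:
    schedule_tolerance: float = 0.20  # milestones within ±20% of plan
    min_adoption: float = 0.60        # share of target users active weekly
    min_improvement: float = 0.15     # relative gain in the target outcome
    deadline_months: int = 18


@dataclass(frozen=True)
class Snapshot:
    month: int
    planned_milestones: int
    completed_milestones: int
    adoption: float
    improvement: float


def assess(s: Snapshot, c: ExitCriteria = ExitCriteria()) -> dict[str, list[str]]:
    persist, pivot = [], []
    if s.planned_milestones > 0:
        deviation = abs(s.completed_milestones - s.planned_milestones) / s.planned_milestones
        if deviation <= c.schedule_tolerance:
            persist.append("milestones on schedule")
    # Assumption: adoption and outcome thresholds only bind at the deadline.
    if s.month >= c.deadline_months:
        if s.adoption < c.min_adoption:
            pivot.append("adoption below threshold")
        if s.improvement < c.min_improvement:
            pivot.append("target outcome below threshold")
    return {"persist": persist, "pivot": pivot}


mid_valley = assess(Snapshot(month=9, planned_milestones=10, completed_milestones=9,
                             adoption=0.35, improvement=0.02))
at_deadline = assess(Snapshot(month=18, planned_milestones=18, completed_milestones=12,
                              adoption=0.45, improvement=0.05))
```

At month nine, low adoption and a flat outcome do not yet count against the program, because the criteria were fixed in advance. At month eighteen the same kind of numbers produce pivot signals, and the decision does not depend on who is in the room.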

The 18-Month Decision Point

Structuring the Bet to Limit Risk

Data unification, API layers, and measurement systems have value regardless of which AI applications succeed. Build foundations that serve multiple futures.

Define "not working" before you start. Specific metrics and timelines give you permission to exit without sunk cost fallacy taking over.

Don't fund three years upfront. Fund the infrastructure build (6 to 12 months), then deployment (6 months), then scaling (12+ months). Each stage must demonstrate progress before the next is funded.

Track competitors. If they succeed in a different domain, that's data. Infrastructure you've built may be 60%+ reusable for the pivot.

The Human Enterprise

There is a temptation, when confronting technological change of this magnitude, to speak of inevitability, to suggest that the future is already determined and our only choice is how quickly we adapt.

I want to resist that framing.

The agentic enterprise is not something that will happen to us. It is something we will build. The choices we make about how to design these systems, what values to encode within them, and how to distribute authority between human and artificial participants are not technical details. They are moral and strategic decisions that will shape the character of our organizations for decades to come.

The firms that thrive will not be those that simply deploy the most autonomous systems. They will be those that most thoughtfully integrate autonomous capability with human purpose. They will understand that the goal is not to remove humans from the loop, but to elevate humans to the work that only humans can do: the work of meaning, judgment, and care.

The scattered-tools approach fails not just strategically but philosophically. It treats AI as a set of features to be sprinkled across the organization. The concentrated infrastructure approach succeeds because it treats AI as a new medium for organizational intelligence, one that requires deliberate architecture, explicit governance, and sustained commitment.

The stateless oracle waited for your questions and gave you answers. The agentic enterprise asks you something far more profound: What do you actually want? What do you truly value? What kind of organization do you want to become?

The technology is ready for your answer.

The question is whether you are ready to give it.