Over the last year or so, the most important question in enterprise AI has been deceptively simple: How do we build agentic systems? Underneath this question, however, another one has been forming – quieter, more uncomfortable, and far more consequential: Who is responsible when they act incorrectly?

The moment an AI system is permitted to execute an API call, write to a database, or commit a transaction, it ceases to be a passive tool and becomes an organizational actor. And actors, whether human or machine, require clearly defined authority, bounded failure modes, and unambiguous accountability.

The first phase of enterprise AI focused on structure: models, data, orchestration, and scale. The next phase is about sovereignty. Most organizations are attempting to scale autonomy without answering three foundational questions: What is an AI agent actually allowed to do? How does the organization recover when, not if, it acts incorrectly? And which human ultimately owns the outcome of that action? These are not governance afterthoughts. They are architectural requirements.

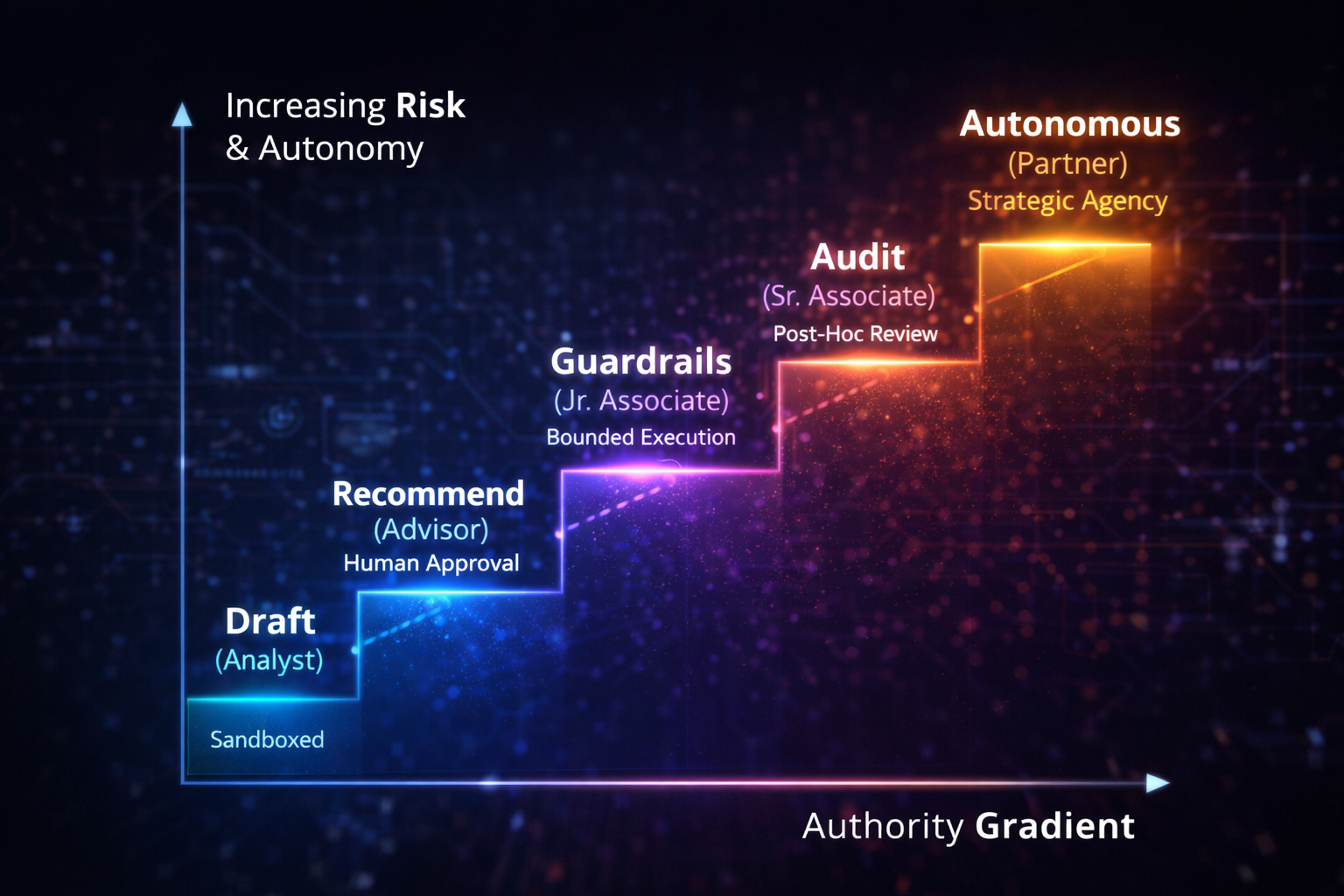

The Authority Gradient

Authority is not a binary switch. It is a gradient that must be climbed deliberately. Many enterprises treat autonomy as a binary decision: either the agent executes, or it does not. This framing is dangerous. Autonomy should be earned, not granted, because the cost of premature authority is not just failure but unrecoverable failure. A practical way to design for this is a staged authority model, in which agents progress through clearly defined levels of responsibility.

Consider a Dynamic Pricing Agent responsible for adjusting prices based on demand signals, competitor activity, and supply constraints.

- At the lowest level, the agent operates in Draft mode (the Analyst). It generates pricing recommendations in a sandbox. No execution occurs, so the cost of error is limited to poor advice.

- As trust is established, the agent moves to Recommend mode (the Advisor). It surfaces proposed price changes directly to a human dashboard, and a human explicitly approves or rejects each change. Risk is low, and the pace of change is bounded by human review latency.

- Next comes Execute-with-Guardrails (the Junior Associate). The agent may adjust prices autonomously within strict tolerances, such as ±5%. Any deviation beyond that range automatically pauses execution and routes the decision to a human. Risk is bounded by design, not hope.

- In Execute-with-Audit mode (the Senior Associate), the agent executes all changes immediately to capture market speed. Humans no longer approve each action in advance; instead, they review structured audit logs asynchronously to detect drift, bias, or emerging second-order effects.

- Only in the final stage, Autonomous (the Partner), does the agent operate with broad strategic agency. At this level, failure is no longer operational; it is systemic. Very few organizations should ever reach this stage, and fewer still should do so accidentally.
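To make the gradient concrete, here is a minimal sketch of how a proposed price change might be routed differently at each authority level. The names `AuthorityLevel`, `PriceChange`, `route`, `GUARDRAIL_PCT`, and the returned action strings are all hypothetical illustrations, not an existing API; the ±5% tolerance mirrors the Execute-with-Guardrails example above.

```python
from dataclasses import dataclass
from enum import IntEnum


class AuthorityLevel(IntEnum):
    """Hypothetical staged authority levels, lowest to highest."""
    DRAFT = 1                    # the Analyst: sandbox recommendations only
    RECOMMEND = 2                # the Advisor: a human approves each change
    EXECUTE_WITH_GUARDRAILS = 3  # the Junior Associate: autonomous within tolerance
    EXECUTE_WITH_AUDIT = 4       # the Senior Associate: executes, reviewed later
    AUTONOMOUS = 5               # the Partner: broad strategic agency


@dataclass
class PriceChange:
    sku: str
    current_price: float
    proposed_price: float

    @property
    def pct_change(self) -> float:
        # Multiply before dividing to keep round percentages exact, e.g. 5.0
        return (self.proposed_price - self.current_price) * 100 / self.current_price


# Hypothetical tolerance, matching the ±5% example in the text.
GUARDRAIL_PCT = 5.0


def route(level: AuthorityLevel, change: PriceChange) -> str:
    """Decide what happens to a proposed change at a given authority level."""
    if level == AuthorityLevel.DRAFT:
        return "sandbox_only"           # recorded as advice; nothing executes
    if level == AuthorityLevel.RECOMMEND:
        return "await_human_approval"   # surfaced on the human dashboard
    if level == AuthorityLevel.EXECUTE_WITH_GUARDRAILS:
        if abs(change.pct_change) <= GUARDRAIL_PCT:
            return "execute"
        return "pause_and_escalate"     # outside tolerance: route to a human
    if level == AuthorityLevel.EXECUTE_WITH_AUDIT:
        return "execute_and_log"        # humans review the audit log asynchronously
    return "execute"                    # AUTONOMOUS
```

The point of the sketch is that the same proposal produces different behavior depending on the level the agent has earned, so the level itself becomes an explicit, reviewable piece of configuration.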

Most enterprise failures occur because organizations collapse this gradient entirely, jumping straight from sandboxed pilots to near-total autonomy. Authority without gradation is indistinguishable from recklessness.
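One way to prevent the gradient from collapsing is to make promotion itself a guarded operation. The sketch below assumes a hypothetical `promote` function that allows moving up only one level at a time and only with a named human approver, while allowing demotion to any lower level at any time so authority can be withdrawn quickly. These rules are illustrative assumptions, not a prescribed policy.

```python
from enum import IntEnum


class AuthorityLevel(IntEnum):
    """Hypothetical staged authority levels, lowest to highest."""
    DRAFT = 1
    RECOMMEND = 2
    EXECUTE_WITH_GUARDRAILS = 3
    EXECUTE_WITH_AUDIT = 4
    AUTONOMOUS = 5


def promote(current: AuthorityLevel, requested: AuthorityLevel,
            approved_by: str) -> AuthorityLevel:
    """Change an agent's authority level without skipping steps upward."""
    if requested <= current:
        return requested  # demotion (or no change) is always allowed
    if requested - current > 1:
        raise ValueError(
            f"cannot jump from {current.name} to {requested.name}; "
            "promote one level at a time"
        )
    if not approved_by:
        raise PermissionError("promotion requires a named human approver")
    return requested
```

With this rule, a jump straight from `DRAFT` to `AUTONOMOUS` fails loudly instead of happening quietly.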

Designing for Failure (When Systems Don’t Fail Loudly)

Designing authority correctly is only half the challenge. The other half is designing for failure in systems that do not crash when they are wrong. Deterministic software fails loudly. Agentic systems fail confidently.


Agentic Failure Classification & Architectural Defenses

To manage agentic systems effectively, failures must be explicitly classified and paired with architectural defenses.

This is not a modeling problem. It is an organizational design problem expressed through software.

Accountability and the Human Decision Node

This is where most enterprises remain dangerously vague. Human-in-the-loop is often invoked as a safeguard, but without authority design, it becomes performative. If a human blindly clicks “Approve” on hundreds of AI recommendations a day, they are not a decision-maker; they are a liability buffer.

True accountability requires contextual handoffs.

When the pricing agent proposes a high-risk action, such as a 15% increase during a supply shortage, it must pause execution and route the decision to a named human owner. That human does not receive raw data. They receive a bounded trade-off: projected revenue gain, churn risk, regulatory exposure, and confidence intervals.
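A contextual handoff can be modeled as a small, structured packet rather than a raw data dump. The sketch below is hypothetical: `HandoffPacket`, `maybe_hand_off`, the field names, and the `estimates` dictionary keys are illustrative assumptions, and the high-risk threshold simply reuses the ±5% guardrail from the pricing example.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class HandoffPacket:
    """Bounded trade-off presented to a named human owner (illustrative fields)."""
    action: str
    owner: str                            # a named person, not a team alias
    projected_revenue_gain: float         # in currency units
    churn_risk: float                     # estimated probability, 0..1
    regulatory_exposure: str              # e.g. "low", "medium", "high"
    revenue_gain_ci: Tuple[float, float]  # confidence interval on the gain


# Hypothetical threshold, reusing the ±5% guardrail from the pricing example.
HIGH_RISK_PCT = 5.0


def maybe_hand_off(pct_change: float, owner: str,
                   estimates: dict) -> Optional[HandoffPacket]:
    """Return a packet (execution pauses) if the action is high-risk, else None."""
    if abs(pct_change) <= HIGH_RISK_PCT:
        return None  # within the agent's authority; no handoff needed
    return HandoffPacket(
        action=f"change price by {pct_change:+.1f}%",
        owner=owner,
        projected_revenue_gain=estimates["revenue_gain"],
        churn_risk=estimates["churn_risk"],
        regulatory_exposure=estimates["regulatory_exposure"],
        revenue_gain_ci=estimates["revenue_gain_ci"],
    )
```

Freezing the packet keeps the trade-off the human saw identical to the trade-off that gets recorded.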

If the human clicks “Authorize,” that authorization is logged as a human decision in the audit trail, not attributed to the agent. Accountability is preserved. Sovereignty is maintained.
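The attribution rule can be enforced in the audit trail itself. In this minimal sketch, `AuditEntry`, `AuditTrail`, and the event names are hypothetical; the key assumption is that an `"authorized"` event may only be recorded by a human actor, so an authorization can never be attributed to the agent.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass(frozen=True)
class AuditEntry:
    timestamp: datetime
    actor_type: str  # "agent" or "human"
    actor_id: str    # agent name or the named human owner
    event: str       # e.g. "proposed", "authorized", "executed"
    detail: str


@dataclass
class AuditTrail:
    entries: List[AuditEntry] = field(default_factory=list)

    def record(self, actor_type: str, actor_id: str, event: str, detail: str) -> None:
        if actor_type not in ("agent", "human"):
            raise ValueError(f"unknown actor type: {actor_type}")
        # Authorizations must be owned by a human, never by the agent.
        if event == "authorized" and actor_type != "human":
            raise PermissionError("only a named human can authorize an action")
        self.entries.append(
            AuditEntry(datetime.now(timezone.utc), actor_type, actor_id, event, detail)
        )

    def performed_by(self, event: str) -> List[str]:
        """Return who performed a given event, e.g. who authorized a change."""
        return [e.actor_id for e in self.entries if e.event == event]


trail = AuditTrail()
trail.record("agent", "pricing-agent", "proposed", "+15% on SKU-123")
trail.record("human", "jane.doe", "authorized", "+15% on SKU-123")
trail.record("agent", "pricing-agent", "executed", "+15% on SKU-123")
```

Separating who proposed, who authorized, and who executed lets the trail answer the accountability question directly.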

Accountability is not about slowing systems down. It is about ensuring that the outcomes that matter most are owned by humans, deliberately and transparently.

The Bottom Line

The greatest risk in agentic systems is not that machines will act incorrectly. It is that organizations will be unable to say, with confidence, who was responsible when they did.

We do not maximize autonomy by removing humans from the loop. We maximize autonomy by designing architectures that allow humans to trust what the machine will do next and intervene precisely when judgment, ethics, or strategic intent are required. In the era of acting AI, intelligence without governance is not innovation. It is exposure.