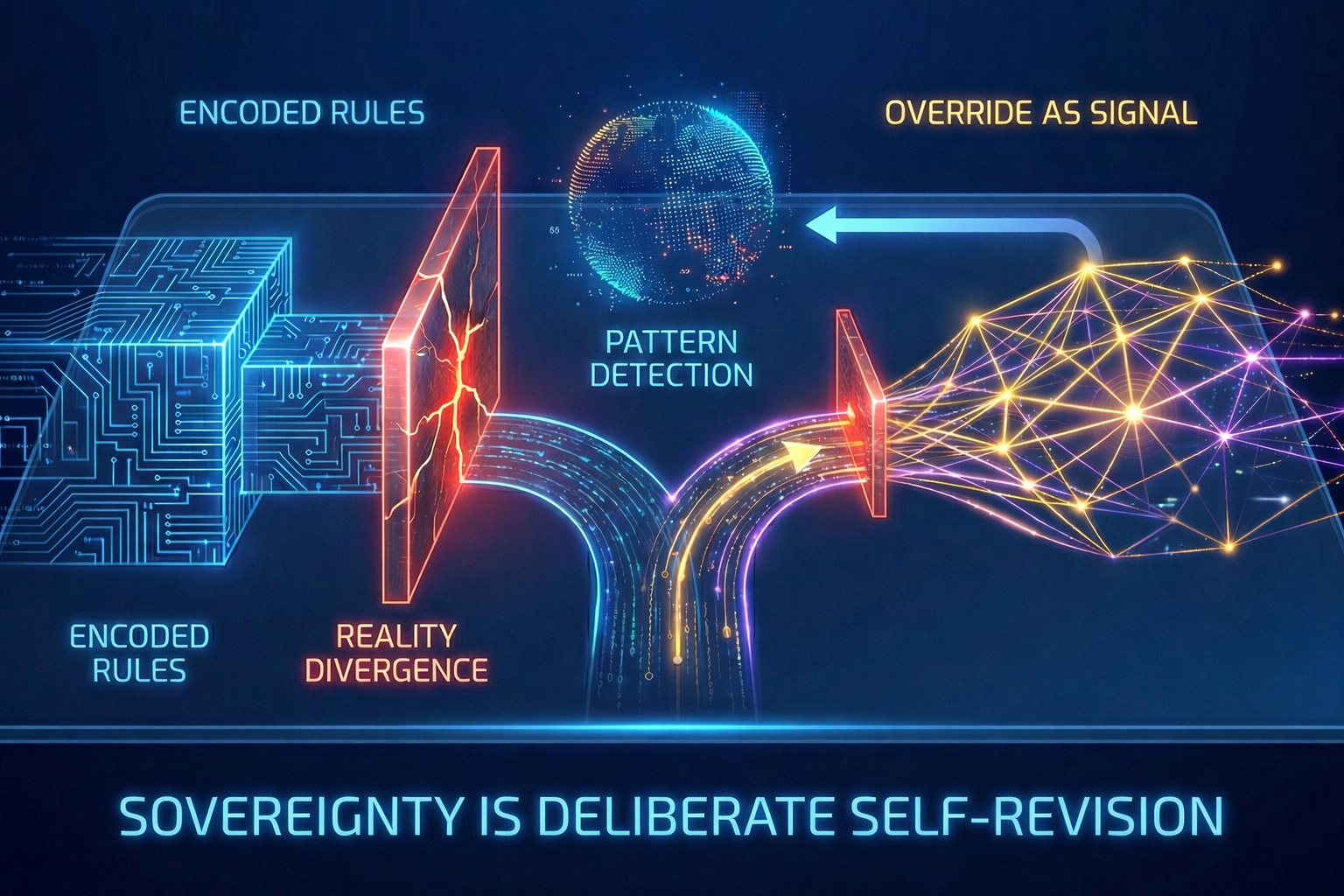

The biggest paradox of AI sovereignty is this: the more successfully an enterprise encodes its intent into enforceable logic, the more catastrophically it fails when that logic diverges from reality.

Perfect codification does not create resilient systems. It creates systems that fail confidently, at scale, without hesitation. Enterprises that survive the AI transition will not be those that eliminate exceptions, but those that design for legitimate override from the start, treating rule-breaking not as governance failure but as the main way systems learn.

This is uncomfortable territory. After months or years spent designing authority boundaries, encoding constraints, and earning stakeholder trust in autonomous execution, the last thing leadership wants to hear is that rules must be breakable. But the alternative is worse: systems that consistently and irreversibly execute outdated logic while the world moves on.

The question is not whether rules will be wrong, but whether the organization has designed a way to learn when they are.

The Illusion of Static Control

Most enterprises approach AI governance as if the goal is permanence. Policies are encoded. Constraints are deployed. Execution begins. The system is now "governed."

This mental model treats encoded logic like infrastructure, something built once and maintained periodically. But logic is not infrastructure. It is a hypothesis about how the world works, what the organization values, and which trade-offs are acceptable. Hypotheses have a half-life.

Markets shift. Customer behavior evolves. Regulatory interpretations change. Competitive dynamics force strategic pivots. Social norms reframe what was once acceptable risk. Rules that made sense six months ago may be wrong today, not because they were poorly designed, but because reality changed faster than consensus could update them.

When this happens, organizations face a choice. Either humans intervene to override the system, or the system continues executing bad logic at machine speed. Both options are failures if override was not designed as a legitimate, structured capability.

Enterprises that treat override as a deviation rather than data will optimize it away. They will measure success by how rarely rules are broken, incentivize teams to stay within bounds, and treat exceptions as evidence of poor training or weak governance. In doing so, they build sovereignty that cannot adapt.

Override as Information, Not Failure

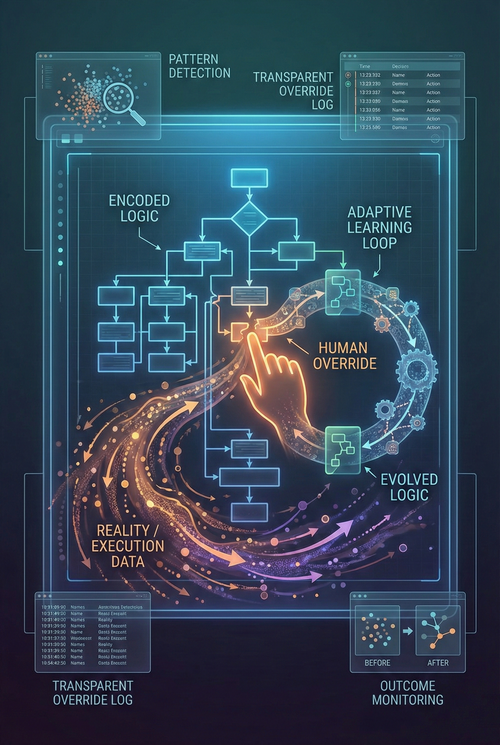

The critical reframe is to recognize that overrides are not violations. They are signals.

When a human overrides an AI system's decision, that action contains information: the encoded logic did not account for something the human considered essential. It might be context the system lacked, a constraint the organization never made explicit, an edge case no one anticipated, or evidence that foundational assumptions have changed.

If overrides are treated as exceptions to be suppressed, that information is lost. If they are treated as learning signals, they become the means by which logic evolves.

This does not mean every override is legitimate. Some are gaming. Some are risk-taking disguised as judgment. Some reflect local optimization at the expense of global coherence. The challenge is not to eliminate overrides, but to distinguish those that reveal flaws in the system from those that reveal flaws in the override.

Legitimacy: The Test Overrides Must Pass

Not all rule-breaking is equal. Mature AI-native organizations establish explicit criteria for what constitutes a legitimate override.

First, visibility. Legitimate overrides are transparent. They are logged, attributed to a named decision-maker, and accompanied by reasoning. Overrides that occur invisibly through workarounds, shadow systems, or unreported manual intervention are not legitimate, regardless of the outcome. They erode trust and prevent learning.

Second, justification. Legitimate overrides are explained. The decision-maker must articulate why the encoded rule does not apply: novel context, changed conditions, conflict with higher-order values, or evidence that assumptions no longer hold. This is not bureaucracy. It is the substrate from which pattern detection becomes possible.

Third, reversibility. Legitimate overrides do not permanently bypass governance. They are exceptions, not new defaults. If the same override is necessary repeatedly, the problem does not lie in local judgment; it lies in the rule itself.

Fourth, accountability. Legitimate overrides carry ownership. If the override results in a bad outcome, responsibility lies with the human who made the call, not the system or the process. This ensures overrides are taken seriously, not casually.

These criteria do not prevent bad overrides. They make them visible, analyzable, and subject to organizational learning, rather than hidden and repeated.
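To make the four criteria concrete, here is a minimal sketch of how an override record might be checked against them. Everything in it is an assumption made for illustration: the `OverrideRecord` fields, the `legitimacy_issues` function, and the five-word threshold for a reason are hypothetical, not a prescribed schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class OverrideRecord:
    """One logged override. All field names are illustrative assumptions."""
    rule_id: str
    decided_by: Optional[str]         # named decision-maker (visibility)
    reason: str                       # why the rule does not apply (justification)
    expires_at: Optional[datetime]    # when the exception lapses (reversibility)
    accountable_owner: Optional[str]  # who answers for the outcome (accountability)
    logged_at: datetime


def legitimacy_issues(record: OverrideRecord, min_reason_words: int = 5) -> list[str]:
    """Return the criteria a record fails; an empty list means it passes all four."""
    issues = []
    if not record.decided_by:
        issues.append("visibility: no named decision-maker")
    if len(record.reason.split()) < min_reason_words:
        issues.append("justification: reasoning missing or too thin")
    if record.expires_at is None or record.expires_at <= record.logged_at:
        issues.append("reversibility: override has no future expiry")
    if not record.accountable_owner:
        issues.append("accountability: no owner for the outcome")
    return issues
```

A record that passes this check is not necessarily a wise override. The check only confirms that the override is visible and analyzable, which is all the criteria promise.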

Pattern Detection: When Overrides Become Evidence

The most important capability in adaptive sovereignty is pattern detection across overrides.

A single override is a data point. A cluster of overrides around the same rule is a hypothesis: the rule may be wrong. When overrides concentrate in predictable ways by geography, customer segment, time of day, confidence threshold, or decision type, the organization has discovered something its encoded logic does not yet reflect.

This is where governance becomes evolutionary rather than static. Instead of treating clustered overrides as compliance failures, leadership treats them as evidence that reality has shifted and encoded logic must catch up.

Consider a pricing system operating under constraints designed for stable markets. When overrides spike in a specific region, investigation reveals that a competitor's aggressive entry has changed customer expectations. The constraint is not being gamed; it is being correctly identified as obsolete. The override pattern is not a governance problem. It is an early warning system.

Without structured override data, this insight arrives too late, buried in quarterly reviews or post-mortems. With it, the organization learns in near real time and can decide whether to update global logic, create regional exceptions, or investigate further before acting.
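As a rough illustration of pattern detection, the sketch below counts overrides per rule along one dimension and flags combinations that account for a large share of that rule's overrides. The record schema (dicts with a `rule_id` key plus context keys such as `region`), the function name, and both thresholds are assumptions chosen for the example.

```python
from collections import Counter


def override_clusters(overrides, dimension, min_count=5, min_share=0.5):
    """Flag (rule_id, value) pairs where overrides of a rule concentrate.

    `overrides` is a list of dicts with a "rule_id" key plus context keys
    such as "region" or "segment" (hypothetical schema).
    """
    per_rule = Counter(o["rule_id"] for o in overrides)
    per_pair = Counter((o["rule_id"], o[dimension]) for o in overrides)

    clusters = []
    for (rule_id, value), count in per_pair.items():
        share = count / per_rule[rule_id]
        # Require both enough volume and enough concentration to flag a cluster.
        if count >= min_count and share >= min_share:
            clusters.append(
                {"rule_id": rule_id, dimension: value,
                 "count": count, "share": round(share, 2)}
            )
    return sorted(clusters, key=lambda c: c["count"], reverse=True)
```

A flagged cluster is a hypothesis, not a verdict. This sketch also ignores base rates: a region that handles more decisions will naturally produce more overrides, so a real analysis would likely normalize by decision volume before concluding that a rule is obsolete.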

Authority Structures for Dissent

If overrides are information, then dissent must be designed into the authority structure, not treated as insubordination.

This requires a fundamental shift. Encoded rules are not commands from leadership that must be obeyed. They are the organization's current best understanding of how to act, open to challenge by those closest to execution.

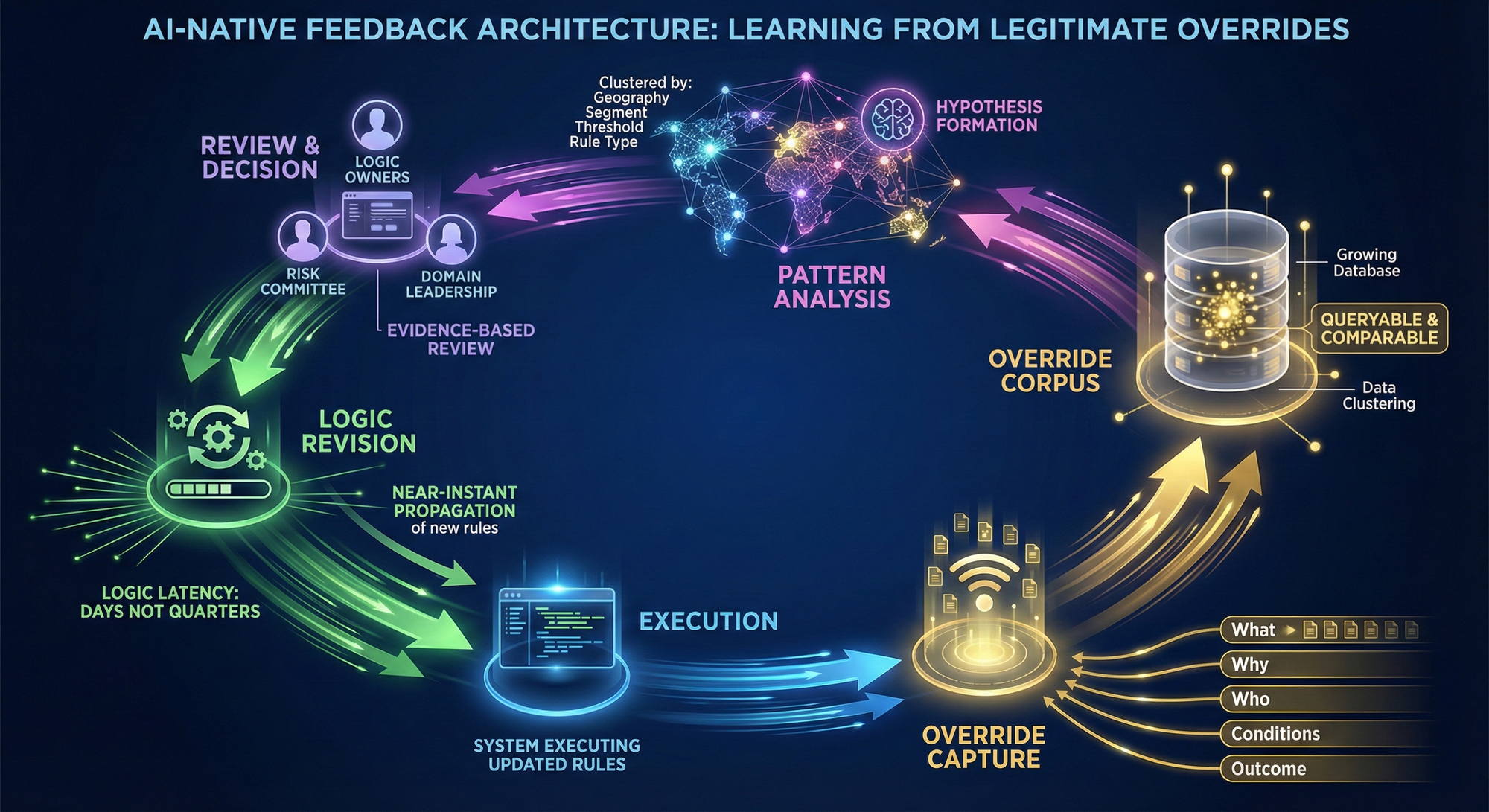

Mature organizations establish escalation paths not just for exceptions, but for rule revision. When someone overrides a constraint, they are also signaling that the rule no longer fits reality. That signal must travel. It must reach whoever owns the logic, such as data teams, risk committees, or domain leadership, and trigger a review process.

This does not mean every override triggers immediate rule changes. It means override data is treated as strategic intelligence, surfaced regularly, and analyzed for patterns that show encoded assumptions have expired.

Critically, the people authorized to override are not the same as those authorized to revise global logic. Local teams may have override authority within bounds. Central teams retain authority over whether local overrides become new rules. This separation prevents fragmentation while preserving adaptive capacity.
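One way to picture this separation is as two distinct grants per team, with revision requests routed to whoever holds revision authority. The sketch below assumes a hypothetical `Authority` record and routing function; the team and rule names in the usage note are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Authority:
    """Hypothetical grant: which rules a team may override and which it may revise."""
    team: str
    can_override: frozenset = field(default_factory=frozenset)
    can_revise: frozenset = field(default_factory=frozenset)


def may_override(authority: Authority, rule_id: str) -> bool:
    """Local authority: bend the rule for one decision."""
    return rule_id in authority.can_override


def may_revise(authority: Authority, rule_id: str) -> bool:
    """Central authority: change the rule for everyone."""
    return rule_id in authority.can_revise


def route_revision_request(requesting: Authority, rule_id: str, grants: list) -> str:
    """Return the team that must decide on a rule change raised by `requesting`."""
    if may_revise(requesting, rule_id):
        return requesting.team
    for grant in grants:
        if may_revise(grant, rule_id):
            return grant.team
    raise LookupError(f"no team holds revision authority for {rule_id!r}")
```

With a local grant such as `Authority("emea-sales", can_override=frozenset({"price-floor"}))` and a central grant such as `Authority("pricing-committee", can_revise=frozenset({"price-floor"}))`, the local team can override the price floor, but its request to change the rule is routed to the committee.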

The Feedback Architecture: Turning Overrides Into Evolution

The technical and organizational architecture needed to make this work is more sophisticated than most enterprises realize.

It is not enough to log overrides. The system must make them queryable, comparable, and linked to outcomes. When an override occurs, the architecture must capture not just what was overridden, but why, by whom, under what conditions, and what happened as a result.

Over time, this creates a corpus of organizational learning: which rules are frequently overridden and why, which overrides produce better outcomes than the original logic, and which overrides are later regretted. This corpus informs the evolution of logic in ways no amount of upfront design can anticipate.

Feedback loops must be fast enough to matter. If pattern detection takes months and rule updates take quarters, the organization is still learning at human speed while its systems execute at machine speed. The competitive advantage goes to enterprises that can propagate learning from override to revised logic in weeks or days.

This is not reckless iteration. It is disciplined adaptation. Changes to global constraints still require deliberation, testing, and stakeholder alignment. But the input to that deliberation, evidence from actual execution, is richer, faster, and more grounded than speculation.

The Paradox That Sovereignty Must Resolve

The hardest question is this: if rules are designed to be breakable, how does sovereignty survive?

The answer is that sovereignty is not the absence of override. It is the presence of a legitimate process around override.

In weakly governed systems, overrides happen invisibly, and accountability is diffuse. Power migrates to whoever intervenes, with no transparency or learning. In strongly governed systems, overrides are explicit, traceable, justified, and analyzed. Power remains structured even when rules bend.

The distinction is between designed flexibility and emergent chaos.

Enterprises that succeed will not be those with zero overrides. They will be those where override authority is explicit, override patterns are visible, and override data feeds back into logic revision predictably. Their sovereignty lies not in perfect rules but in their capacity to recognize when rules are wrong and evolve them deliberately.

What Leadership Must Measure

If override is a capability rather than a failure, then metrics must change.

Leadership should not ask how often rules are overridden. That question incentivizes suppression. They should ask what percentage of overrides are justified, transparent, and lead to system improvement.

They should track override concentration. Are overrides evenly distributed or clustered? Clustering suggests either local gaming or systematic misalignment between logic and reality. Both require intervention, but of different kinds.

They should measure logic latency for override-driven updates. How long does it take for a pattern of overrides to trigger rule revision? This shows whether the organization is learning or ignoring its own signals.

They should also monitor outcomes: do overridden decisions produce better results than the encoded logic would have? If yes, the system is not being subverted; it is being corrected. If no, override authority may be too broad or poorly governed.
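The four measures above can be sketched as simple functions over override data. The field names (`justified`, `transparent`, `led_to_improvement`, `outcome`, `counterfactual`, `first_override_at`, `revised_at`) are hypothetical. The `counterfactual` field in particular is an assumption: what the encoded rule would have produced is never observed for an overridden decision, so it has to be estimated.

```python
from collections import Counter
from datetime import datetime
from statistics import median


def legitimate_share(overrides):
    """Fraction of overrides that are justified, transparent, and led to improvement."""
    if not overrides:
        return 0.0
    ok = sum(
        1 for o in overrides
        if o["justified"] and o["transparent"] and o["led_to_improvement"]
    )
    return ok / len(overrides)


def top_rule_share(overrides):
    """Concentration: share of all overrides that hit the most-overridden rule."""
    if not overrides:
        return 0.0
    counts = Counter(o["rule_id"] for o in overrides)
    return counts.most_common(1)[0][1] / len(overrides)


def median_logic_latency_days(revisions):
    """Median days from the first override in a pattern to the resulting rule revision."""
    return median([(r["revised_at"] - r["first_override_at"]).days for r in revisions])


def override_win_rate(overrides):
    """Share of overrides whose outcome beat the estimated outcome of the encoded rule."""
    if not overrides:
        return 0.0
    wins = sum(1 for o in overrides if o["outcome"] > o["counterfactual"])
    return wins / len(overrides)
```

None of these numbers is meaningful on its own. A high concentration combined with a high win rate points toward an obsolete rule, while a high concentration combined with a low win rate points toward gaming or overly broad override authority.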

The Real Test of Sovereignty

The enterprises that survive the AI era will not be those that encoded the best rules on day one. They will be those that recognized their rules would be wrong, designed for that inevitability, and built systems able to learn from their own violations.

Sovereignty is not static control. It is the capacity for deliberate self-revision.

When logic fails, the question is not whether humans intervene. It is whether that intervention leaves the organization wiser, the system better aligned with reality, and authority still structured rather than eroded.

In the age of autonomous systems, sovereignty is not control; it is the discipline of learning faster than your rules decay.