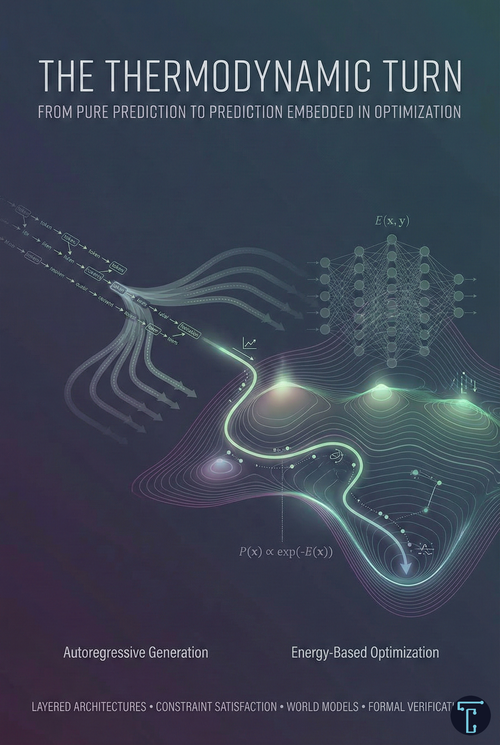

The Thermodynamic Turn: From Pure Prediction to Prediction Embedded in Optimization

The most significant capability gains in AI aren't coming from bigger models; they're coming from embedding prediction inside optimization. Generation is becoming a proposal step: the model proposes candidates, and an optimization process evaluates and selects among them. The thermodynamic turn is already underway.