The Transition Already Underway

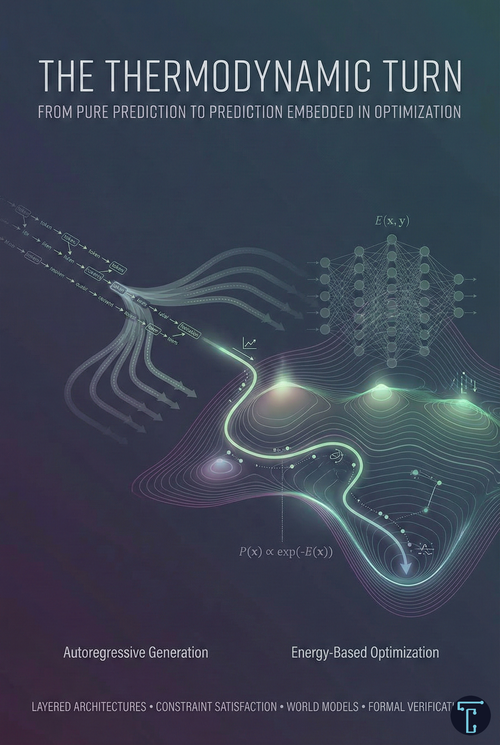

Something significant is happening in artificial intelligence, but it is not the paradigm shift that breathless commentary suggests. We are not witnessing the death of large language models or the triumph of a different architecture. What we are seeing is subtler and more consequential: the gradual embedding of autoregressive generation within optimization frameworks.

The future is not prediction versus energy. It is prediction embedded in optimization.

Understanding this distinction matters because it clarifies where the field is heading, not where ideological debates might wish it to go.

For the past several years, the dominant paradigm in AI has been the autoregressive transformer—a system that treats intelligence as the ability to predict the next token. This framing has produced GPT-4, Claude, and their successors, systems capable of remarkable feats. Yet a growing body of research, led most prominently by Yann LeCun, argues that pure autoregressive prediction faces structural limitations for certain classes of problems. The alternative they propose—Energy-Based Models (EBMs)—offers a different computational framework better suited to constraint satisfaction, verification, and planning. The key insight, however, is not that one paradigm will replace the other. It is that they are converging into layered architectures where each plays a distinct role.

The Mathematical Distinction: What Is Actually Different

To understand why this matters, we must be precise about what distinguishes these approaches mathematically—and equally precise about where the distinction blurs.

An autoregressive language model factorizes a joint distribution causally. Given a sequence of tokens, the model outputs a probability distribution over the next token, samples from that distribution, appends the result, and repeats. The joint probability of a sequence becomes the product of conditional probabilities at each step. This decomposition is computationally elegant because each local distribution normalizes over a finite vocabulary, cascading into a globally normalized distribution without intractable integrals. The price of this elegance is structural: the model commits to each token before seeing future constraints. It cannot revise earlier decisions, only append corrections.
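Below, θ denotes the model parameters and 𝒱 the finite vocabulary; this notation is introduced here for illustration. With it, the causal factorization and its per-step normalization read:

```latex
p_\theta(x_1, \dots, x_T) = \prod_{t=1}^{T} p_\theta(x_t \mid x_{<t}),
\qquad
\sum_{v \in \mathcal{V}} p_\theta(v \mid x_{<t}) = 1 \quad \text{for every prefix } x_{<t}.
```

Each factor is a softmax over |𝒱| logits, so each step normalizes with a single sum over the vocabulary. The product, summed over all |𝒱|^T sequences, then equals exactly one without that sum ever being computed.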

This is mathematically true—not a rhetorical flourish. Global constraints (logical validity, rhyme schemes, consistency with goals) are not enforced by the architecture. Any global coherence must be learned implicitly or simulated through additional machinery, such as prompting, chain-of-thought, or search. The architecture guarantees only that probabilities sum to one at each step; it provides no mechanism for ensuring that a proof is valid, that a plan achieves its goal, or that a sentence remains consistent with earlier claims.

Recent theoretical work has established that autoregressive models (ARMs) can be viewed as a special case of energy-based models. If you define the energy of a sequence as the sum of negative log-probabilities of its tokens, then the probability is the exponential of negative energy, and the global partition function equals exactly one by construction. The autoregressive factorization solves the partition function problem by constraining the energy to decompose into locally normalized terms. The insight is elegant: ARMs are EBMs with a very particular structure imposed.
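The claim can be checked numerically on a toy model. The sketch below makes three assumptions: a three-token vocabulary, a made-up next-token rule (`next_token_probs`, a hypothetical stand-in rather than any real model), and a sequence energy defined as the summed negative log-probabilities. It then sums exp(-E) over every sequence of length four. The only normalization anywhere is the per-token softmax, yet the total comes out to one up to floating-point rounding.

```python
import itertools
import math

VOCAB = ("a", "b", "c")
LENGTH = 4

def next_token_probs(prefix):
    """Hypothetical toy model: softmax over VOCAB with logits that depend on the
    prefix (tokens already used become less likely). Stands in for a real ARM."""
    logits = [1.0 - 0.7 * prefix.count(tok) for tok in VOCAB]
    top = max(logits)
    exps = [math.exp(logit - top) for logit in logits]
    total = sum(exps)
    return {tok: e / total for tok, e in zip(VOCAB, exps)}

def energy(seq):
    """Sequence energy: sum of per-token negative log-probabilities."""
    return -sum(math.log(next_token_probs(seq[:t])[seq[t]]) for t in range(len(seq)))

# Partition function: sum of exp(-E) over all |VOCAB| ** LENGTH sequences (81 here).
Z = sum(math.exp(-energy(seq)) for seq in itertools.product(VOCAB, repeat=LENGTH))
print(f"Z = {Z:.12f}")  # equals 1 up to floating-point rounding
```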

Energy-Based Models, in their general form, offer a different formulation. Rather than asking "what is the probability of the next token given previous tokens," an EBM asks "how compatible is this entire configuration?" The model learns an energy function assigning scalar values to input-output pairs, where low energy corresponds to compatibility and high energy to incompatibility. EBMs evaluate whole configurations rather than prefixes. They naturally encode constraint violation. They need not distribute probability mass over nonsense—they can simply assign high energy to invalid states without committing to which invalid option is most likely.
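Here is a minimal sketch of an energy defined over whole configurations. It assumes a made-up toy task: four tokens from a three-letter vocabulary, where the last token must match the first (a stand-in for a rhyme scheme) and no token may repeat immediately. The energy simply counts violated constraints. The first constraint cannot be scored on any prefix, only on a complete configuration.

```python
import itertools

VOCAB = ("a", "b", "c")

def energy(config):
    """Hypothetical energy over a complete configuration:
    one unit of energy per violated constraint (0 means fully compatible)."""
    violations = 0
    # Global constraint: the last token must "rhyme" with (here: equal) the first.
    if config[-1] != config[0]:
        violations += 1
    # Local constraint: no token may repeat immediately.
    violations += sum(1 for x, y in zip(config, config[1:]) if x == y)
    return violations

configs = list(itertools.product(VOCAB, repeat=4))
valid = [c for c in configs if energy(c) == 0]
print(len(valid), "of", len(configs), "configurations have zero energy")  # 6 of 81
print(energy(("a", "b", "c", "b")))  # 1: violates only the global constraint
```

The valid configurations are exactly those at zero energy. An invalid one receives positive energy without any statement about how likely it is.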

Here is where precision matters: energy-based models and probabilistic models are mathematically dual in many cases. The Boltzmann distribution converts any energy function to a probability distribution, and vice versa. The real distinction is not between probability and energy, but between explicit normalization with causal factorization and implicit global scoring with iterative optimization. You can, and increasingly do, mix these approaches. The question is which computational framework best matches the problem's structure.
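The duality, and the autoregressive special case described above, can be written compactly in standard notation (E is the energy, Z the partition function):

```latex
p(x) = \frac{e^{-E(x)}}{Z}, \qquad Z = \int e^{-E(x')}\,dx' \quad (\text{a sum when } x \text{ is discrete}),
\qquad E(x) = -\log p(x) - \log Z

\text{Autoregressive case: } E(x_{1:T}) = -\sum_{t=1}^{T} \log p_\theta(x_t \mid x_{<t}) \;\Rightarrow\; Z = 1
```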

Why the Distinction Matters (When It Does)

The EBM framing captures constraint satisfaction more naturally than pure autoregression. This matters especially in domains requiring:

Planning and physical reasoning. An agent that must ensure its actions lead to a goal state benefits from evaluating trajectories rather than committing token by token. Energy minimization over action sequences is closer to how planning problems are formally defined.
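To make energy minimization over action sequences concrete, here is a sketch under stated assumptions. A hypothetical 1-D point starts at 0 and moves by x_{t+1} = x_t + a_t. The energy penalizes missing the goal and adds a small effort cost. Plain gradient descent adjusts every action at once, because each one affects the final state; nothing is committed step by step.

```python
def energy(actions, goal, effort_weight=0.5):
    """Energy of a whole action sequence for the toy dynamics x_{t+1} = x_t + a_t, x_0 = 0:
    squared miss at the final state plus a quadratic effort cost."""
    final_pos = sum(actions)
    return (final_pos - goal) ** 2 + effort_weight * sum(a * a for a in actions)

def plan(goal, steps=5, effort_weight=0.5, lr=0.05, iters=2000):
    """Gradient descent on the energy over all actions simultaneously."""
    actions = [0.0] * steps
    for _ in range(iters):
        miss = sum(actions) - goal
        # dE/da_k = 2 * miss + 2 * effort_weight * a_k: the goal term reaches every action.
        actions = [a - lr * (2 * miss + 2 * effort_weight * a) for a in actions]
    return actions

acts = plan(goal=3.0)
print([round(a, 4) for a in acts])
print("energy:", round(energy(acts, goal=3.0), 4))
```

For this quadratic energy the optimum has a closed form: every action equals goal / (steps + effort_weight). The output can therefore be checked by hand (3 / 5.5 ≈ 0.5455 here).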

Scientific discovery and multi-objective optimization. When generating molecules that must satisfy multiple simultaneous constraints, such as binding affinity, toxicity, solubility, and synthesizability, the ability to encode constraints directly into an energy function and search for low-energy configurations offers natural advantages.

Out-of-distribution detection. Autoregressive models with softmax outputs must assign probability mass among available options even when none are appropriate. EBMs can assign high energy to the input, signaling incompatibility without forcing a choice among bad options. This matters for safety-critical systems.
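The contrast can be shown with a few numbers. The sketch below uses hypothetical classifier logits and takes the negative log-sum-exp of the logits as an energy score, a common choice in the out-of-distribution detection literature. Softmax is invariant to shifting all logits by a constant. Two inputs whose logits differ by a uniform shift of 10 therefore receive identical probabilities, yet the energy score still separates them.

```python
import math

def softmax(logits):
    top = max(logits)
    exps = [math.exp(v - top) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def energy_score(logits):
    """Energy score E(x) = -log sum_k exp(logit_k); lower means more compatible."""
    top = max(logits)
    return -(top + math.log(sum(math.exp(v - top) for v in logits)))

familiar = [9.0, 4.0, 4.0]       # hypothetical logits for an in-distribution input
unfamiliar = [-1.0, -6.0, -6.0]  # the same logits shifted down by 10

print(softmax(familiar))
print(softmax(unfamiliar))                               # identical probabilities
print(energy_score(familiar), energy_score(unfamiliar))  # energies differ by 10
```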

Formal verification. When correctness guarantees are required rather than statistical likelihood—proving that code meets specifications, that a system remains within certified boundaries—energy-based formulations align more directly with the underlying mathematical problem.

The analogy to mathematicians and chess players is not superficial. A mathematician checking a proof evaluates whether steps cohere, not the probability of the next symbol. A chess grandmaster assesses whether a position is winning, not the likelihood of each possible move. These are different computational problems: sampling from a distribution versus optimizing under constraints.

The Partition Function: Historical Bottleneck, Algorithmic Solution

The reason EBMs were historically impractical, and why this has changed, centers on the partition function. Converting energy to probability requires normalizing over all possible states, a sum or integral that is computationally intractable in high-dimensional spaces. The history of EBMs is largely a history of algorithms designed to approximate or circumvent this intractability.
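A quick count shows the scale of the problem. It uses an illustrative vocabulary of 50,000 tokens and sequences of 100 tokens; both figures are assumptions, not taken from any particular model. A global normalizer must cover every possible sequence. An autoregressive model only ever normalizes one softmax per position of the sequence it is scoring.

```python
import math

vocab_size = 50_000   # illustrative assumption
seq_len = 100         # illustrative assumption

# Global partition function: one term per possible sequence, vocab_size ** seq_len.
log10_global_terms = seq_len * math.log10(vocab_size)

# Autoregressive normalization: one softmax over the vocabulary per position.
local_terms = seq_len * vocab_size

print(f"global normalizer: about 10^{log10_global_terms:.1f} terms")  # about 10^469.9
print(f"autoregressive normalizers: {local_terms:,} terms per scored sequence")  # 5,000,000
```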

Contrastive Divergence, pioneered by Geoffrey Hinton for Restricted Boltzmann Machines, approximates the gradient of the log-likelihood using truncated Markov Chain Monte Carlo sampling. Score Matching, developed by Aapo Hyvärinen, sidesteps the partition function by matching the gradient of the log-density rather than the density itself, since the gradient of the normalizing constant vanishes and need not be computed. Noise Contrastive Estimation converts the generative problem into a discriminative one, training the model to distinguish real data from noise samples. Each approach contributed to the gradual expansion of what EBMs could accomplish, but each also had significant practical limitations.
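The two identities behind these methods follow from p_θ(x) = e^{-E_θ(x)} / Z_θ, where θ denotes the energy function's parameters. The maximum-likelihood gradient contains an expectation under the model, which Contrastive Divergence approximates with short MCMC chains. The score with respect to x does not involve Z_θ at all, and Score Matching exploits exactly that.

```latex
\nabla_\theta \log p_\theta(x) = -\nabla_\theta E_\theta(x) + \mathbb{E}_{x' \sim p_\theta}\big[\nabla_\theta E_\theta(x')\big]

\nabla_x \log p_\theta(x) = -\nabla_x E_\theta(x) - \underbrace{\nabla_x \log Z_\theta}_{=\,0} = -\nabla_x E_\theta(x)
```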

Diffusion models represent the decisive rebuttal to claims that EBMs cannot scale. They train score functions (gradients of the log-density, equivalently negative energy gradients) across many noise levels. They generate by gradually denoising random noise into structured data, via annealed Langevin dynamics or closely related reverse-diffusion samplers. The key insight is annealing. At high noise the smoothed energy landscape is simple and nearly unimodal; as the noise is lowered step by step, the landscape sharpens into its complex, multimodal form. Because the sampler follows the landscape as it sharpens, it can reach the major modes instead of getting trapped in whichever local minimum lies nearest its starting point. This largely resolves the mixing problem that plagued earlier MCMC-based approaches.
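Here is a small numerical sketch of annealing under strong simplifying assumptions. The "data" is a hypothetical two-mode Gaussian mixture whose noised score is known in closed form; a real diffusion model learns this score with a neural network. The step-size rule is a simple choice made here, not one taken from a specific paper. Chains that start from broad noise and cool through a geometric schedule of noise levels end up split between both modes. Plain Langevin dynamics at low noise, started in one mode, never leaves it.

```python
import math
import random

# Hypothetical 1-D "data" distribution: two well-separated Gaussian modes.
MEANS, STD, WEIGHTS = (-4.0, 4.0), 0.5, (0.5, 0.5)

def score(x, sigma):
    """Exact score d/dx log p_sigma(x) of the mixture convolved with N(0, sigma^2).
    A diffusion model would learn this function with a neural network."""
    var = STD ** 2 + sigma ** 2
    logs = [math.log(w) - (x - m) ** 2 / (2 * var) for w, m in zip(WEIGHTS, MEANS)]
    top = max(logs)
    resp = [math.exp(v - top) for v in logs]  # component responsibilities, unnormalized
    total = sum(resp)
    return sum(r / total * (m - x) / var for r, m in zip(resp, MEANS))

def langevin(xs, sigma, steps, rng, eps=0.1):
    """Unadjusted Langevin dynamics at one noise level:
    x <- x + (a / 2) * score(x) + sqrt(a) * z, with z ~ N(0, 1).
    The step a is proportional to the noised variance (a simple choice for this sketch)."""
    a = eps * (STD ** 2 + sigma ** 2)
    for _ in range(steps):
        xs = [x + 0.5 * a * score(x, sigma) + math.sqrt(a) * rng.gauss(0.0, 1.0) for x in xs]
    return xs

def annealed_langevin(n=500, levels=10, steps=60, seed=0):
    rng = random.Random(seed)
    sigmas = [10.0 * 0.001 ** (i / (levels - 1)) for i in range(levels)]  # 10 down to 0.01
    xs = [rng.gauss(0.0, sigmas[0]) for _ in range(n)]  # start from broad noise
    for sigma in sigmas:  # "cool" from high noise to low noise
        xs = langevin(xs, sigma, steps, rng)
    return xs

def frac_left(xs):
    return sum(x < 0 for x in xs) / len(xs)

annealed = annealed_langevin()
# Baseline without annealing: low noise only, every chain started in the right-hand mode.
stuck = langevin([4.0] * 500, sigma=0.01, steps=600, rng=random.Random(1))

print(f"annealed:     {frac_left(annealed):.2f} of samples in the left mode")  # near 0.5
print(f"no annealing: {frac_left(stuck):.2f} of samples in the left mode")     # stays at 0
```

Both runs use the same number of score evaluations per chain. The difference comes only from the noise schedule.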

The results speak for themselves: state-of-the-art image generation, coherent video synthesis, natural-sounding audio. These models generate outputs of unprecedented quality in high-dimensional continuous spaces, the domain where partition function intractability was supposed to make EBMs impractical. Anyone dismissing energy-based approaches as theoretically elegant but impractical is working from outdated information.

The implication is clear: the partition function problem is algorithmic, not fundamental. The question is no longer whether EBMs can scale, but rather how best to integrate them with other components in practical systems.

The Crucial Insight: Autoregressive Models Are Already Becoming Optimization Systems

Here is where many discussions of EBMs versus LLMs miss the forest for the trees. Modern autoregressive models are no longer pure next-token predictors. Through inference-time scaling and reinforcement learning from verifiable rewards, they become implicit optimizers.

Consider what Large Reasoning Models actually do. Systems like OpenAI's o1 and o3, DeepSeek's R1, and Google's Gemini Deep Think achieved dramatic capability jumps not by predicting tokens differently, but by:

- Sampling multiple reasoning trajectories

- Evaluating partial solutions against verifiable outcomes

- Backtracking implicitly through extended thinking traces

- Allocating more computation to harder problems

This is optimization behavior, not pure generation. From a functional perspective, the model is searching a space of thoughts using learned heuristics. On the AIME 2024 competition-math benchmark, o1 achieved 83.3% accuracy (using consensus over 64 samples) compared to GPT-4o's 13.4%. In Codeforces programming competitions, o1 ranked in the 89th percentile of competitors, while GPT-4o ranked in the 11th percentile. These are not incremental improvements from better prediction; they represent qualitative capability jumps enabled by inference-time computation and search.

The sharp contrast between ARMs and EBMs blurs considerably when you examine what inference-time scaling actually implements. The generation mechanism remains causal; tokens are still produced sequentially, but the system behavior is optimized over trajectories.
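Here is a toy version of that split between causal generation and trajectory-level selection. It assumes a hypothetical digit generator and a hypothetical verifier; neither reflects how any particular reasoning model works internally. Each candidate is produced strictly left to right, but the verifier can only score a finished trajectory, and the system keeps the lowest-energy candidate. Drawing more samples per problem, that is, spending more inference-time compute, raises the fraction of targets hit exactly.

```python
import random

def sample_trajectory(rng, length=4):
    """Hypothetical causal generator: emits digits 0-9 one at a time, left to right,
    each conditioned only on the prefix (here: a mild tendency to repeat the last digit)."""
    seq = []
    for _ in range(length):
        if seq and rng.random() < 0.3:
            seq.append(seq[-1])
        else:
            seq.append(rng.randrange(10))
    return seq

def energy(seq, target):
    """Hypothetical global verifier: distance of the digit sum from the target.
    It can only be evaluated on a finished trajectory."""
    return abs(sum(seq) - target)

def best_of_n(target, n, rng):
    """Sample n complete trajectories causally, then keep the lowest-energy one."""
    candidates = [sample_trajectory(rng) for _ in range(n)]
    return min(candidates, key=lambda s: energy(s, target))

rng = random.Random(0)
targets = [rng.randrange(5, 30) for _ in range(500)]
for n in (1, 4, 16, 64):
    solved = sum(energy(best_of_n(t, n, rng), t) == 0 for t in targets)
    print(f"n={n:>2}: {solved / len(targets):.0%} of targets hit exactly")
```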

When you train with reinforcement learning from verifiable rewards (RLVR), you implicitly shape an energy function over reasoning trajectories: low energy corresponds to correct solutions, high energy to dead ends. The model learns to navigate that landscape autoregressively, but the objective is global correctness rather than local likelihood. The reward is assigned to the finished trajectory and credited to every token decision through the policy gradient. Training therefore shapes the model to prefer globally coherent reasoning paths even though it still generates tokens one at a time.
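Here is a minimal REINFORCE-style sketch of how a trajectory-level reward shapes token-level decisions. It assumes a hypothetical two-token tabular policy over the vocabulary {0, 1, 2}, with a verifiable reward of 1 when the tokens sum to 3. Starting with 0 is a dead end no matter what follows, yet the only signal is the end-of-trajectory reward. The policy gradient credits that reward to both token decisions, so the first-step policy learns to avoid the dead end. Production RLVR uses large networks and more elaborate policy-gradient algorithms; this sketch shows only the mechanism.

```python
import math
import random

VOCAB = [0, 1, 2]
TARGET = 3  # verifiable reward: 1 if the two tokens sum to TARGET, else 0

def softmax(logits):
    top = max(logits)
    exps = [math.exp(v - top) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical tabular autoregressive policy: logits for token 1, and for token 2 given token 1.
first_logits = [0.0, 0.0, 0.0]
second_logits = [[0.0, 0.0, 0.0] for _ in VOCAB]

def success_prob():
    """Exact probability that a sampled two-token trajectory earns reward 1."""
    p1 = softmax(first_logits)
    return sum(p1[a] * softmax(second_logits[a])[b]
               for a in VOCAB for b in VOCAB if a + b == TARGET)

def reinforce_step(rng, lr=0.5, baseline=0.0):
    p1 = softmax(first_logits)
    a = rng.choices(VOCAB, weights=p1)[0]
    p2 = softmax(second_logits[a])
    b = rng.choices(VOCAB, weights=p2)[0]
    reward = 1.0 if a + b == TARGET else 0.0
    adv = reward - baseline
    # Gradient of log-softmax w.r.t. logits is onehot - probs. The single
    # end-of-trajectory reward is credited to *both* token decisions.
    for k in VOCAB:
        first_logits[k] += lr * adv * ((k == a) - p1[k])
        second_logits[a][k] += lr * adv * ((k == b) - p2[k])
    return reward

rng = random.Random(0)
before = success_prob()
baseline = 0.0
for _ in range(2000):
    r = reinforce_step(rng, baseline=baseline)
    baseline = 0.9 * baseline + 0.1 * r  # running-average baseline reduces variance
after = success_prob()
print(f"P(correct) before: {before:.3f}, after: {after:.3f}")
print("P(first token = 0, a dead end):", round(softmax(first_logits)[0], 3))
```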

This is already a thermodynamic move: optimization emerging within an autoregressive shell. It matters because it shows the dichotomy between paradigms is less sharp than theoretical analysis implies. Autoregressive models are not reasoning systems in the architectural sense; they cannot enforce global constraints in a single forward pass, but they can host reasoning processes. They can learn to simulate search, verification, and even backtracking internally. That distinction between architecture and learned behavior is critical for understanding where the field is heading.

The Thermodynamic Turn

The thermodynamic turn is gradual, integrative, and already underway.

LeCun's Vision: World Models for Physical Intelligence

Yann LeCun's critique of autoregressive generation focuses specifically on continuous data, such as video, and on problems that require causal world models. His argument is precise: predicting pixels is wasteful because the space of possible futures is high-dimensional and multimodal. A model trying to predict every visual detail must expend capacity on irrelevant information.

The alternative, Joint-Embedding Predictive Architecture (JEPA), projects inputs into an abstract latent space and predicts future latent states rather than raw observations. Training is framed as energy minimization, with the energy defined as the prediction error between predicted and actual latent representations. The model thus learns to assign low energy to pairs of representations that correspond to causally related states.
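The sketch below illustrates the energy side of that idea with hand-specified stand-ins rather than learned networks. A hypothetical observation carries two predictable latent dimensions plus eight dimensions of unpredictable noise. The "encoder" keeps the latent part, and the "predictor" applies the latent dynamics. The energy is the squared prediction error in representation space. It is zero for the causally related pair and positive for an unrelated one. Even the best pixel-space guess for the true pair still pays for the noise it cannot predict. Real JEPA training must learn the encoder and predictor, and it also needs a mechanism against representational collapse, which this sketch omits.

```python
import numpy as np

rng = np.random.default_rng(0)
theta = 0.3
ROT = np.array([[np.cos(theta), -np.sin(theta)],
                [np.sin(theta),  np.cos(theta)]])  # hypothetical latent dynamics

def observe(latent):
    """Hypothetical observation: 2 meaningful dims plus 8 dims of unpredictable 'pixel' noise."""
    return np.concatenate([latent, rng.normal(size=8)])

def encode(obs):
    """Stand-in for a learned encoder: keeps only the predictable dims."""
    return obs[:2]

def predict(s):
    """Stand-in for a learned latent predictor."""
    return ROT @ s

def energy(obs_x, obs_y):
    """JEPA-style energy: prediction error measured in representation space."""
    return float(np.sum((predict(encode(obs_x)) - encode(obs_y)) ** 2))

z = rng.normal(size=2)
x = observe(z)
y_true = observe(ROT @ z)              # causally related next observation
y_other = observe(rng.normal(size=2))  # unrelated observation

print("latent energy, true pair:     ", round(energy(x, y_true), 4))  # 0.0
print("latent energy, unrelated pair:", round(energy(x, y_other), 4))
# Best pixel-space guess for the true pair (zeros for the noise dims) still pays for the noise.
pixel_err = float(np.sum((np.concatenate([ROT @ z, np.zeros(8)]) - y_true) ** 2))
print("pixel-space error, true pair: ", round(pixel_err, 4))
```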

Recent work provides concrete foundations. LeJEPA establishes that the optimal embedding distribution for JEPA architectures is an isotropic Gaussian and introduces a regularizer that achieves it in practice. V-JEPA demonstrates effectiveness for video understanding. VL-JEPA extends the framework to vision-language tasks. DINO-World Model shows how world models that predict in a pretrained latent feature space enable zero-shot planning in robotics.

This matters most for embodied AI—robots, autonomous systems, agents that must act in physical environments. The critique is not that LLMs are useless; rather, they address a different problem. "Produce plausible text" is not the same as "act coherently in a world."

The Ecosystem Approach: What Serious Practitioners Actually Believe

The most important corrective to paradigm-shift narratives comes from practitioners building these systems. The emerging consensus is not replacement but composition: an interdependent ecosystem where EBMs, LLMs, world models, and formal verification each contribute what they do best.

Energy-based reasoning is increasingly positioned to sit beneath generative AI stacks as a verification layer, not replacing language models but deciding what they are allowed to do. For mission-critical environments such as energy systems, industrial automation, financial infrastructure, and hardware design, this division of labor addresses a genuine need: these markets demand systems that can be certified and defended, not merely optimized for performance.

This framing captures where the field is heading better than triumphalist narratives about paradigm replacement. LLMs excel at rapid generation, natural language fluency, and creative interpolation. EBMs excel at constraint enforcement, formal verification, and detecting when outputs violate specifications. Neither alone suffices for the full range of AI applications.

The mission-critical domain makes this especially clear. When software controls physical assets, financial risk, or human safety, the ability to prove correctness, not merely achieve high accuracy, becomes essential. Energy-based formulations provide the mathematical framework for such guarantees.

What Human Cognition Actually Suggests

A note of caution about cognitive analogies. The essay would be incomplete without acknowledging that human reasoning is not purely energy minimization.

Humans generate hypotheses sequentially. We commit prematurely. We rationalize after the fact. We use heuristics that violate clean optimization principles. Human cognition is messy, path-dependent, and often inconsistent. Dual-process theory (System 1 intuition versus System 2 deliberation) describes a division of labor, not two clean computational frameworks.

So while EBMs are normatively attractive for certain problems, they are not a perfect cognitive model. The lesson from cognitive science is that intelligence involves multiple interacting systems, not a single computational paradigm. This supports hybrid architectures, not paradigm replacement.

The Realistic Architecture

What does the future look like? Not a single paradigm triumphant, but a layered system where different computational frameworks handle different aspects of intelligence:

Fast autoregressive proposal layer. Language fluency, intuitive pattern completion, and rapid generation of candidate responses. This remains valuable because sequential generation is computationally efficient for many tasks, and humans communicate in sequences. LLMs will continue to serve as the interface layer, the part of AI that talks to humans, because they are extraordinarily good at it.

Energy-based evaluation layer. Consistency checking, constraint enforcement, and verification against specifications. This catches errors that generation alone cannot prevent and provides formal guarantees where they matter. When an LLM proposes an action, an EBM can verify that the action satisfies the required constraints before execution.
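Here is a minimal sketch of such a gate, assuming a hypothetical battery controller with a made-up specification (capacity, power limit, state-of-charge bounds). The energy is hand-written rather than learned. It has one hinge term per constraint, zero when the constraint holds and growing with the size of the violation. A proposed action, for example one emitted by an LLM, executes only when the total energy is zero. Zero energy certifies only the constraints actually encoded.

```python
from dataclasses import dataclass

@dataclass
class Action:
    """Hypothetical proposed action for a battery controller."""
    charge_kw: float  # positive = charge, negative = discharge
    hours: float

# Hypothetical specification.
CAPACITY_KWH = 100.0
MAX_POWER_KW = 25.0
SOC_MIN, SOC_MAX = 0.1, 0.9

def constraint_energies(action, soc):
    """Each term is zero when its constraint holds and grows with the violation."""
    new_soc = soc + action.charge_kw * action.hours / CAPACITY_KWH
    return {
        "power_limit": max(0.0, abs(action.charge_kw) - MAX_POWER_KW),
        "soc_floor": max(0.0, SOC_MIN - new_soc),
        "soc_ceiling": max(0.0, new_soc - SOC_MAX),
        "duration_nonneg": max(0.0, -action.hours),
    }

def gate(action, soc):
    """Allow execution only if total energy is zero; otherwise report violated constraints."""
    terms = constraint_energies(action, soc)
    violated = [name for name, e in terms.items() if e > 0]
    return (len(violated) == 0, violated)

soc = 0.5
proposals = [Action(20.0, 1.0), Action(30.0, 1.0), Action(-20.0, 3.0)]  # e.g. from an LLM
for p in proposals:
    ok, why = gate(p, soc)
    print(p, "->", "execute" if ok else f"reject {why}")
```

Keeping the terms separate rather than summing them lets the gate tell the proposal layer which constraint failed. A generate-and-evaluate loop can use that information on its next attempt.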

Search and planning loops. Iteration between generation and evaluation until low-energy states are found. Allocation of computation proportional to problem difficulty. Simple queries get fast responses; complex problems trigger extended reasoning. This is what inference-time scaling implements, and it will become more sophisticated.

Grounded world models. Especially for physical and embodied tasks, latent-space prediction that filters noise and captures causal structure. JEPA-style architectures learn to predict at the level of abstraction that matters for action, rather than at the pixel or token level. Robotics and autonomous systems need this; language tasks may not.

This is not "EBM replaces ARM." It is autoregression becoming the front end of an optimization system. The proposal layer generates, the evaluation layer filters, the search loop iterates, and the world model grounds. Each component contributes according to its computational strengths. The thermodynamic turn is real, but it is gradual and integrative, not a revolution or a replacement.

The Transition Already Underway

The core insight that intelligence cannot be reduced to fluent continuation is correct. Pure next-token prediction faces structural limitations for global constraint satisfaction, formal verification, and planning under uncertainty. Energy-based formulations capture these problems more naturally. The myopia of autoregressive models, committing to tokens before seeing future constraints, is a real architectural limitation.

But the framing matters enormously:

We are not moving from prediction to energy. We are moving from pure prediction to prediction embedded in optimization.

That transition is not coming; it is already underway. Inference-time scaling, RLVR, and hybrid architectures are being implemented within existing systems. The reasoning models of 2026 are functionally different from the language models of 2023, though they share architectural DNA.

The practical implications follow directly. For mission-critical applications such as infrastructure, healthcare, finance, and autonomous systems, verification layers become essential. Energy-based reasoning provides the mathematical framework for guarantees that generation alone cannot offer. This infrastructure is being built now, positioning energy-based reasoning beneath existing AI stacks rather than in place of them.

For general-purpose AI, the future is layered: fast generation, careful verification, iterative refinement. Neither paradigm alone suffices. The "thermodynamic turn" is real, but it is better understood as the emergence of an ecosystem than as the displacement of one architecture by another: an interdependent system in which EBMs, LLMs, and world models each contribute what they do best.

The question is no longer whether energy-based approaches can scale or whether autoregressive models can reason. Both have demonstrated more capability than skeptics predicted. Diffusion models prove EBMs work at scale. Large Reasoning Models prove AR systems can implement optimization behavior. The theoretical debate has been superseded by empirical results from both camps.

The question now is how best to compose these capabilities, how to build systems that generate fluently and verify rigorously, that propose intuitively and evaluate deliberately, that predict locally and cohere globally. The mathematics exists. The algorithms are mature. The engineering challenge is integration.

That is the actual frontier. Not paradigm war, but architectural synthesis. Not replacement, but complementarity. The thermodynamic turn names something real: the recognition that constraint satisfaction, energy minimization, and global coherence matter for intelligence, not merely fluent local continuation. But the turn is gradual, integrative, and most importantly, already happening within the systems we deploy today.

What makes this moment pivotal is not that one paradigm will triumph. It is that we finally have the tools, the scale, and the understanding to build systems that combine their strengths. The future of AI is not autoregressive or energy-based. It is autoregressive and energy-based, layered appropriately for the task at hand. That synthesis is the work ahead.